Guest Post: Nathan Moynihan on Amplitudes for Astrophysicists

As someone who sits at Richard Feynman’s old desk, I take Feynman diagrams very seriously. They are a very convenient and powerful way of answering a certain kind of important physical question: given some set of particles coming together to interact, what is the probability that they will evolve into some specific other set of particles?

Unfortunately, actual calculations with Feynman diagrams can get unwieldy. The answers they provide are only approximate (though the approximations can be very good), and making the approximations just a little more accurate can be a tremendous amount of work. Enter the “amplitudes program,” a set of techniques for calculating these scattering probabilities more directly, without adding together a barrel full of Feynman diagrams. This isn’t my own area, but we’ve had guest posts from Lance Dixon and Jaroslav Trnka about this subject a while back.

But are these heady ideas just brain candy for quantum field theorists, or can they be applied more widely? A very interesting new paper just came out arguing that even astrophysicists, who usually deal with objects a lot bigger than a few colliding particles, can put amplitude technology to good use! And we’re very fortunate to have a guest post on the subject by one of the authors, Nathan Moynihan. Nathan is a grad student at the University of Cape Town, studying under Jeff Murugan and Amanda Weltman, and works on quantum field theory, gravity, and information. This is a great introduction to the application of cutting-edge mathematical physics to some (relatively) down-to-Earth phenomena.

In a recent paper, my collaborators and I (Daniel Burger, Raul Carballo-Rubio, Jeff Murugan and Amanda Weltman) make a case for applying modern methods in scattering amplitudes to astrophysical and cosmological scenarios. In this post, I would like to explain why I think this is interesting, and why you, if you’re an astrophysicist or cosmologist, might want to use the techniques we have outlined.

In a scattering experiment, objects of known momentum $p$ are scattered from a localised target, with various possible outcomes predicted by some theory. In quantum mechanics, the probability of a particular outcome is given by the square of the scattering amplitude, a complex number that can be derived directly from the theory. Scattering amplitudes are the central quantities of interest in (perturbative) quantum field theory, and in the last 15 years or so, there has been something of a minor revolution surrounding the tools used to calculate these quantities (partially inspired by the introduction of twistors into string theory). In general, a particle theorist will make a perturbative expansion of the path integral of her favorite theory into Feynman diagrams, and mechanically use the Feynman rules of the theory to calculate each diagram’s contribution to the final amplitude. This approach works perfectly well, although the calculations are often tough, depending on the theory.

Astrophysicists, on the other hand, are often not concerned too much with quantum field theories, preferring to work with the classical theory of general relativity. However, it turns out that you can, in fact, do the same thing with general relativity: perturbatively write down the Feynman diagrams and calculate scattering amplitudes, at least to first or second order. One of the simplest scattering events you can imagine in pure gravity is that of two gravitons scattering off one another: you start with two gravitons, they interact, you end up with two gravitons. You can calculate this using Feynman diagrams, and the answer is strikingly simple, barely one line long.

The calculation, on the other hand, is utterly vicious. An unfortunate PhD student by the name of Walter G Wesley was given this monstrous task in 1963, and he found that to calculate the amplitude meant evaluating over 500 terms, which as you can imagine took the majority of his PhD to complete (no Mathematica!). The answer, in the end, is breathtakingly simple. In the centre of mass frame, the cross section for this amplitude is:

![]()

Here $G$ is Newton’s constant, $E$ is the energy of the gravitons, and $\theta$ is the scattering angle. The fact that calculations of this type are so cumbersome has meant that many in the astrophysics community eschew them.

However, the fact that the answer is so simple suggests that there may be an easier route than evaluating all 500-plus terms. Indeed, this is exactly the point we make in our paper: using the methods we have outlined, this calculation can be done in half a page and with little effort.

The technology that we introduce can be summed up as follows, and should be easily recognised as a set of common tools present in any physicist’s arsenal: a change of variables (for the more mathematically inclined reader, a change of representation), recursion relations and complex analysis. Broadly speaking, the idea is to take the basic components of Feynman diagrams, momentum vectors and polarisation vectors (or tensors), and to represent them as spinors, objects that are inherently complex in nature (they are elements of a complex vector space). Once we do that, we can utilise the transformation rules of spinors to simplify calculations. This simplifies things a bit, but we can do better.
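As a concrete illustration of this change of variables, here is a minimal numerical sketch in Python with NumPy. The function names and the sign convention for the $2\times 2$ matrix are choices made for this example, not taken from the paper. It packages a massless four-momentum into a $2\times 2$ matrix, checks that the determinant $E^2 - |\vec{p}|^2$ vanishes, and shows that the matrix factorises into the outer product of a two-component spinor with its complex conjugate.

```python
import numpy as np

def momentum_matrix(p):
    """Map a four-momentum (E, px, py, pz) to the 2x2 matrix E*1 + p.sigma.

    The sign convention is an assumption of this sketch; others are in use.
    """
    E, px, py, pz = p
    return np.array([[E + pz, px - 1j * py],
                     [px + 1j * py, E - pz]], dtype=complex)

def spinor(p):
    """Two-component spinor lam with outer(lam, conj(lam)) == momentum_matrix(p).

    Valid only for a real, massless momentum with E + pz > 0.
    """
    E, px, py, pz = p
    return np.array([E + pz, px + 1j * py], dtype=complex) / np.sqrt(E + pz)

def massless_momentum(px, py, pz):
    """Build (E, px, py, pz) with E fixed by the massless condition E = |p|."""
    return np.array([np.sqrt(px**2 + py**2 + pz**2), px, py, pz])

p = massless_momentum(1.0, 2.0, 2.0)
P = momentum_matrix(p)
lam = spinor(p)

# The determinant is E^2 - |p|^2, so it vanishes for a massless particle.
det_P = np.linalg.det(P)

# A 2x2 matrix with zero determinant has rank one, so it factorises.
factorises = np.allclose(np.outer(lam, lam.conj()), P)

print("det P =", det_P)
print("P = lam lam^dagger:", factorises)
```

The point of the exercise is that the four components of a massless momentum, which are tied together by a quadratic constraint, are traded for the two unconstrained complex components of `lam`.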

The next trick is to observe that Feynman diagrams all have one common feature: they contain real singularities (meaning the amplitude blows up) wherever there are physical internal lines. These singularities usually show up as poles, functions that behave like $1/z$. Typically, an internal line might contribute a factor like $\frac{1}{p^2 + m^2}$ (in a mostly-plus metric signature), which obviously blows up around $p^2 = -m^2$.

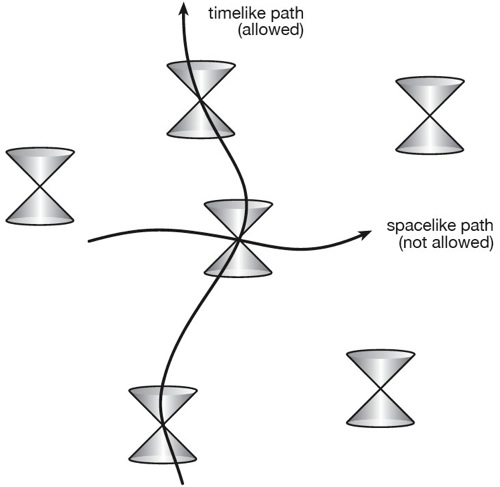

We normally make ourselves feel better by taking these internal lines to be virtual, meaning they don’t correspond to physical processes that satisfy the energy-momentum condition $p^2 = -m^2$, and thus never blow up. In contrast to this, the modern formulation insists that internal lines do satisfy the condition, but that the pole is complex. Now that we have complex poles, we can utilise the standard tools of complex analysis, which tell us that if you know about the poles and residues, you know everything. For this to work, we are required to insist that at least some of the external momenta are complex, since the internal momentum depends on the external momenta. Thankfully, we can do this in such a way that momentum conservation holds and that the square of the momentum is still physical.
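To make the last statement concrete, here is a small numerical sketch of one standard way to complexify two external momenta. The particular vectors `p1`, `p2`, `q`, the value of `z`, and the metric convention `ETA` are illustrative assumptions, not taken from the paper. Two massless momenta are shifted in opposite directions by a complex multiple of a null vector `q` that is orthogonal to both, so the total momentum is unchanged and each shifted momentum still squares to zero.

```python
import numpy as np

# Mostly-plus Minkowski metric (a convention choice for this sketch).
ETA = np.diag([-1.0, 1.0, 1.0, 1.0])

def dot(a, b):
    """Minkowski product a.b, with no complex conjugation."""
    return a @ ETA @ b

E = 1.0
p1 = np.array([E, 0, 0, E], dtype=complex)    # massless, moving along +z
p2 = np.array([E, 0, 0, -E], dtype=complex)   # massless, moving along -z

# A complex null vector orthogonal to both p1 and p2.
q = np.array([0, 1, 1j, 0], dtype=complex)

z = 0.7 - 1.3j                                # arbitrary complex shift parameter
p1_shifted = p1 + z * q
p2_shifted = p2 - z * q

# Momentum conservation: the shifts cancel in the sum.
total_conserved = np.allclose(p1_shifted + p2_shifted, p1 + p2)

# On-shell condition: each shifted momentum is still massless.
still_massless = bool(np.isclose(dot(p1_shifted, p1_shifted), 0)
                      and np.isclose(dot(p2_shifted, p2_shifted), 0))

print("momentum conserved:", total_conserved)
print("still on-shell:", still_massless)
```

Because `q` is null and orthogonal to both momenta, the checks hold for every complex `z`, which is what lets the amplitude be treated as a function of a single complex variable.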

The final ingredient we need is the set of BCFW recursion relations, an indispensable tool of the amplitudes community, developed by Britto, Cachazo, Feng and Witten in 2005. Roughly speaking, these relations tell us that we can turn a complex, singular, on-shell amplitude of any number of particles into a product of 3-particle amplitudes glued together by poles. Essentially, this means we can treat amplitudes like Lego bricks and stick them together in an intelligent way in order to construct a really-difficult-to-compute amplitude from some relatively simple ones.
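For readers who like to see the structure written out, here is a schematic version of the argument. It is a sketch, not the precise statement from the paper, and it holds only up to convention-dependent signs and factors of $i$. The notation is an assumption of this sketch: $A(z)$ is the amplitude after two external momenta are shifted by $\pm zq$ with $q^2 = 0$, $P_I(z) = P_I + zq$ is the momentum flowing through internal line $I$, $z_I$ is the value of $z$ at which that line goes on-shell, $h$ is the helicity of the internal state, and $A_L$, $A_R$ are the lower-point amplitudes on either side of the line. It also assumes massless internal lines and that $A(z) \to 0$ as $|z| \to \infty$.

```latex
\begin{align}
A(0) &= \frac{1}{2\pi i}\oint_{|z|=\epsilon} \frac{dz}{z}\, A(z)
      = -\sum_I \operatorname*{Res}_{z = z_I} \frac{A(z)}{z}, \\
P_I(z)^2 &= P_I^2 + 2 z\, q\cdot P_I = 0
      \quad\Longrightarrow\quad z_I = -\frac{P_I^2}{2\, q\cdot P_I}, \\
A(0) &= \sum_{I}\sum_{h} A_L^{h}(z_I)\, \frac{1}{P_I^2}\, A_R^{-h}(z_I).
\end{align}
```

The first line is the statement that the poles and residues determine the physical amplitude $A(0)$, the second locates the poles, and the third is the gluing of smaller amplitudes by a propagator. Applied repeatedly, the amplitudes on the right-hand side can themselves be broken down until only 3-particle amplitudes remain.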

In the paper, we show how this can be achieved using the example of scattering a graviton off a scalar. This interaction is interesting since it’s a representation of a gravitational wave being ‘bent’ by the gravitational field of a massive object like a star. We show, in a couple of pages, that the result calculated using these methods exactly corresponds with what you would calculate using general relativity.

If you’re still unconvinced by the utility of what I’ve outlined, then do look out for the second paper in the series, hopefully coming in the not too distant future. Whether you’re a convert or not, our hope is that these methods might be useful to the astrophysics and cosmology communities in the future, and I would welcome any comments from any members of those communities.
