This year’s Sakurai Prize of the American Physical Society, one of the most prestigious awards in theoretical particle physics, has been awarded to Zvi Bern, Lance Dixon, and David Kosower “for pathbreaking contributions to the calculation of perturbative scattering amplitudes, which led to a deeper understanding of quantum field theory and to powerful new tools for computing QCD processes.” An “amplitude” is the fundamental thing one wants to calculate in quantum mechanics — the probability that something happens (like two particles scattering) is given by the amplitude squared. This is one of those topics that is absolutely central to how modern particle physics is done, but it’s harder to explain the importance of a new set of calculational techniques than something marketing-friendly like finding a new particle. Nevertheless, the field pioneered by Bern, Dixon, and Kosower made a splash in the news recently, with Natalie Wolchover’s masterful piece in Quanta about the “Amplituhedron” idea being pursued by Nima Arkani-Hamed and collaborators. (See also this recent piece in Scientific American, if you subscribe.)
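To make the "amplitude squared" rule concrete, here is a toy Python sketch. The two complex numbers are arbitrary placeholders for two different ways the same process can happen, for example two Feynman diagrams. They do not come from any real calculation. Because amplitudes are added before squaring, the probability contains an interference term that a naive sum of separate probabilities misses.

```python
import math

# Toy illustration: quantum amplitudes are complex numbers that are summed
# *before* squaring. The two values are arbitrary placeholders, not the
# result of any real Feynman-diagram calculation.
a1 = 0.6 + 0.0j   # hypothetical contribution from one diagram
a2 = -0.3 + 0.4j  # hypothetical contribution from another diagram


def probability(amplitude: complex) -> float:
    """Probability (up to overall normalization) is the squared modulus |A|^2."""
    return abs(amplitude) ** 2


p_total = probability(a1 + a2)                 # |a1 + a2|^2, the correct answer
p_naive = probability(a1) + probability(a2)    # |a1|^2 + |a2|^2, misses interference
interference = 2 * (a1.conjugate() * a2).real  # cross term 2 Re(a1* a2)

# Identity: |a1 + a2|^2 = |a1|^2 + |a2|^2 + 2 Re(a1* a2)
assert math.isclose(p_total, p_naive + interference)

print(f"|a1 + a2|^2     = {p_total:.2f}")
print(f"|a1|^2 + |a2|^2 = {p_naive:.2f}")
print(f"interference    = {interference:.2f}")
```

With these made-up values the interference is destructive, so the true probability is smaller than the naive sum of the two separate probabilities.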


I thought about writing up something about scattering amplitudes in gauge theories, similar in spirit to the post on effective field theory, but quickly realized that I wasn’t nearly familiar enough with the details to do a decent job. And you’re lucky I realized it, because instead I asked Lance Dixon if he would contribute a guest post. Here’s the result, which sets a new bar for guest posts in the physics blogosphere. Thanks to Lance for doing such a great job.

—————————————————————-

“Amplitudes: The untold story of loops and legs”

Sean has graciously offered me a chance to write something about my research on scattering amplitudes in gauge theory and gravity, with my longtime collaborators, Zvi Bern and David Kosower, which has just been recognized by the Sakurai Prize for theoretical particle physics.

In short, our work was about computing things that could in principle be computed with Feynman diagrams, but for which it was much more efficient to use some general principles instead. In one sense, the collection of ideas might be considered “just tricks”, because the general principles have been around for a long time. On the other hand, these methods have provided results that have in turn led to new insights into the structure of gauge theory and gravity. They have also produced results for physics processes at the Large Hadron Collider that have been unachievable by other means.

The great Russian physicist Lev Landau, a contemporary of Richard Feynman, has a quote that has been a continual source of inspiration for me: “A method is more important than a discovery, since the right method will lead to new and even more important discoveries.”

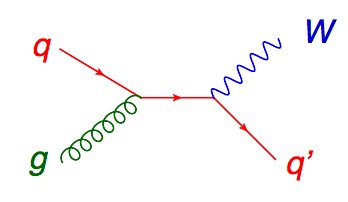

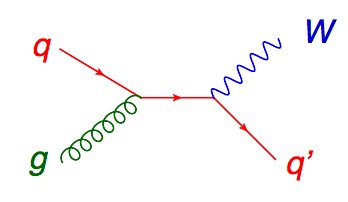

The work with Zvi and David, which has spanned two decades, is all about scattering amplitudes, which are the complex numbers that get squared in quantum mechanics to provide probabilities for incoming particles to scatter into outgoing ones. High-energy physics is essentially the study of scattering amplitudes, especially those for particles moving very close to the speed of light. Two incoming particles at a high-energy collider smash into each other, and a multitude of new, outgoing particles can be created from their relativistic energy. In perturbation theory, scattering amplitudes can be computed (in principle) by drawing all Feynman diagrams. The first order in perturbation theory is called tree level, because you draw all diagrams without any closed loops, which look roughly like trees. For example, one of the two tree-level Feynman diagrams for a quark and a gluon to scatter into a W boson (a carrier of the weak force) and a quark is shown here.

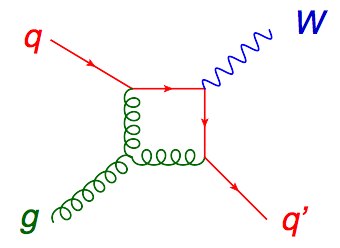

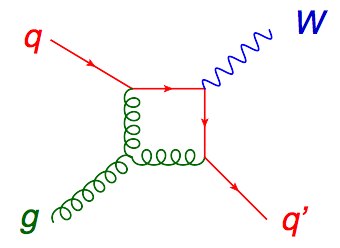

We write this process as qg → Wq. To get the next approximation (called NLO, for next-to-leading order), you compute the one-loop corrections: all diagrams with one closed loop. One of the 11 such diagrams for the same process is shown here.

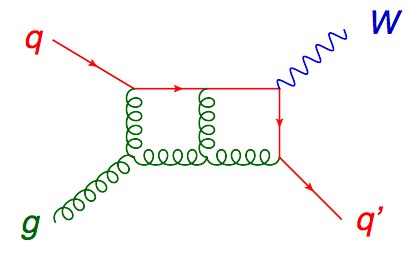

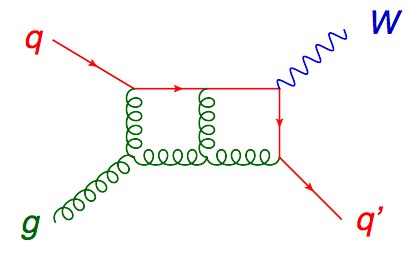

Then two loops (one diagram out of hundreds is shown here), and so on.
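Schematically, and up to convention-dependent normalization factors (such as powers of $1/(16\pi^2)$ and color factors), each additional loop in a gauge theory brings two more powers of the coupling $g$. The loop expansion is therefore a series in $g^2$. In the sketch below the tree-level couplings are absorbed into the coefficients $\mathcal{A}^{(L)}$, where $L$ counts loops. Squaring shows how the one-loop amplitude enters an NLO prediction: through its interference with the tree amplitude.

```latex
\mathcal{A} = \mathcal{A}^{(0)} + g^{2}\,\mathcal{A}^{(1)} + g^{4}\,\mathcal{A}^{(2)} + \cdots

|\mathcal{A}|^{2} = |\mathcal{A}^{(0)}|^{2}
  + 2\,g^{2}\,\mathrm{Re}\!\left[\mathcal{A}^{(0)*}\,\mathcal{A}^{(1)}\right]
  + \mathcal{O}(g^{4})
```

The interference term is only the one-loop ("virtual") part of NLO. A full NLO prediction also includes tree-level processes with one extra emitted particle.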

The forces underlying the Standard Model of particle physics are all described by gauge theories, also called Yang-Mills theories. The one that holds the quarks and gluons together inside the proton is a theory of “color” forces called quantum chromodynamics (QCD). The physics at the discovery machines called hadron colliders — the Tevatron and the LHC — is dominantly that of QCD. Feynman rules, which assign a formula to each Feynman diagram, have been known since Feynman’s work in the 1940s. The ones for QCD have been known since the 1960s. Still, computing scattering amplitudes in QCD has remained a formidable problem for theorists.

Back around 1990, the state of the art for scattering amplitudes in QCD was just one loop. It was also basically limited to “four-leg” processes, which means two particles in and two particles out. For example, gg → gg (two gluons in, two gluons out). This process (or reaction) gives two “jets” of high energy hadrons at the Tevatron or the LHC. It has a very high rate (probability of happening), and gives our most direct probe of the behavior of particles at very short distances.

Another reaction that was just being computed at one loop around 1990 was qg → Wq (one of whose Feynman diagrams you saw earlier). This is another copious process and therefore an important background at the LHC. But these two processes are just the tip of an enormous iceberg; experimentalists can easily find LHC events with six or more jets (http://arxiv.org/abs/arXiv:1107.2092, http://arxiv.org/abs/arXiv:1110.3226, http://arxiv.org/abs/arXiv:1304.7098), each one coming from a high energy quark or gluon. There are many other types of complex events that they worry about too.

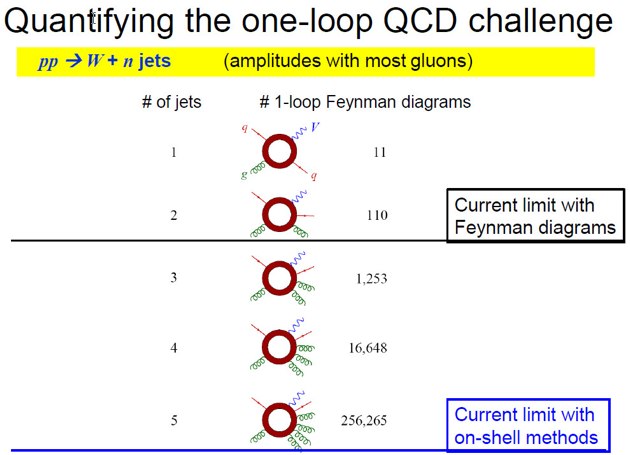

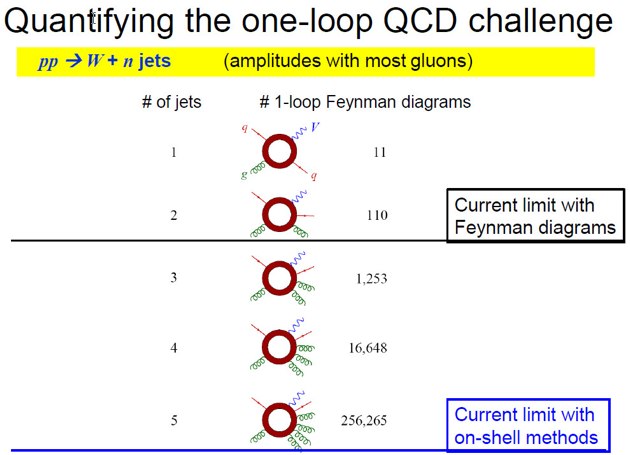

A big problem for theorists is that the number of Feynman diagrams grows rapidly with both the number of loops and the number of legs. In the case of the number of legs, for example, there are only 11 one-loop Feynman diagrams for qg → Wq. One diagram a day, and you are done in under two weeks; no problem. However, if you instead want to do the series of processes qg → Wqg, qg → Wqgg, qg → Wqggg, and qg → Wqgggg, you face 110, 1,253, 16,648, and 256,265 Feynman diagrams, respectively. That could ruin your whole decade (or more). [See the figure; the ring-shaped blobs stand for the sum of all one-loop Feynman diagrams.]
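The "one diagram a day" arithmetic can be pushed through the whole series with a few lines of Python. This sketch uses only the one-loop diagram counts quoted above. The 365-day year and the fixed pace are simplifying assumptions. It also prints the ratio between successive counts, which itself grows (from about 10 to about 15) with each added gluon.

```python
# One-loop Feynman-diagram counts quoted in the text for qg -> Wq with
# extra gluons. Everything below is simple arithmetic on those counts.
diagram_counts = {
    "qg -> Wq": 11,
    "qg -> Wqg": 110,
    "qg -> Wqgg": 1253,
    "qg -> Wqggg": 16648,
    "qg -> Wqgggg": 256265,
}

DIAGRAMS_PER_DAY = 1  # the "one diagram a day" pace from the text


def years_of_work(n_diagrams: int, per_day: int = DIAGRAMS_PER_DAY) -> float:
    """Calendar years needed at a fixed pace, assuming 365 working days a year."""
    return n_diagrams / per_day / 365.0


previous = None
for process, count in diagram_counts.items():
    # Growth factor relative to the process with one fewer gluon.
    growth = f"x{count / previous:5.1f}" if previous else "   --"
    print(f"{process:14s} {count:8,d} diagrams  {growth}  "
          f"{years_of_work(count):7.1f} years")
    previous = count
```

At this pace the last process in the series would take roughly seven centuries, which is why a new method is needed rather than a faster pace.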

It’s not just the raw number of diagrams. Many of the diagrams with large numbers of external particles are much, much messier than the 11 diagrams for qg → Wq. Plus the messy diagrams tend to be numerically unstable, causing problems when you try to get numbers out. This problem definitely calls out for a new method.
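One generic way numerical instability can arise is cancellation: individual terms are large, they nearly cancel, and the small remainder is the answer you actually want. The Python toy below uses invented numbers, not a real diagram calculation. It shows how ordinary double-precision addition can lose such a remainder entirely, while the standard library's `math.fsum`, which tracks the rounding errors, recovers it.

```python
import math

# Toy model of a large cancellation: two huge terms cancel, leaving a small
# remainder. The numbers are invented for illustration only.
terms = [1e16, 1.0, -1e16]

naive_sum = 0.0
for t in terms:
    naive_sum += t  # plain left-to-right double-precision addition

# math.fsum keeps track of low-order bits lost to rounding,
# so it returns the correctly rounded total.
exact_sum = math.fsum(terms)

print("naive double-precision sum:", naive_sum)
print("math.fsum result:          ", exact_sum)
```

The 1.0 is absorbed when it is added to 1e16, because doubles near that magnitude are spaced 2 apart. The subsequent subtraction then leaves nothing. Real amplitude calculations face subtler versions of the same problem.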

Why care about all these scattering amplitudes at all? …


One of the chapters in Surely You’re Joking, Mr. Feynman is titled “Alfred Nobel’s Other Mistake.” The first being dynamite, of course, and the second being the Nobel Prize. When I first read it I was a little exasperated by Feynman’s kvetchy tone — sure, there must be a lot of nonsense associated with being named a Nobel Laureate, but it’s nevertheless a great honor, and more importantly the Prizes do a great service for science by highlighting truly good work.
