Why is the Universe So Damn Big?

I love reading io9; it’s such a fun mixture of science fiction, entertainment, and pure science. So I was happy to respond when their writer George Dvorsky emailed to ask an innocent-sounding question: “Why is the scale of the universe so freakishly large?”

You can find the fruits of George’s labors at this io9 post. But my own answer went on at sufficient length that I might as well put it up here too. Of course, as with any “Why?” question, we need to keep in mind that the answer might simply be “Because that’s the way it is.”

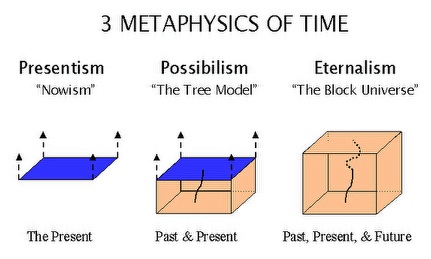

Whenever we seem surprised or confused about some aspect of the universe, it’s because we have some pre-existing expectation for what it “should” be like, or what a “natural” universe might be. But the universe doesn’t have a purpose, and there’s nothing more natural than Nature itself — so what we’re really trying to do is figure out what our expectations should be.

The universe is big on human scales, but that doesn’t mean very much. It’s not surprising that humans are small compared to the universe, but big compared to atoms. That feature does have an obvious anthropic explanation — complex structures can only form on in-between scales, not at the very largest or very smallest sizes. Given that living organisms are going to be complex, it’s no surprise that we find ourselves at an in-between size compared to the universe and compared to elementary particles.

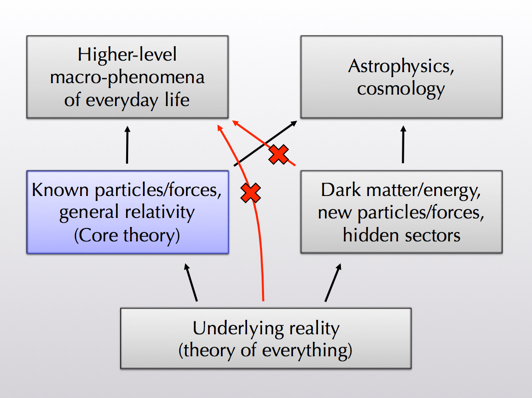

What is arguably more interesting is that the universe is so big compared to particle-physics scales. The Planck length, from quantum gravity, is 10^{-33} centimeters, and the size of an atom is roughly 10^{-8} centimeters. The difference between these two numbers is already puzzling — that’s related to the “hierarchy problem” of particle physics. (The size of atoms is fixed by the length scale set by electroweak interactions, while the Planck length is set by Newton’s constant; the two distances are extremely different, and we’re not sure why.) But the scale of the universe is roughly 10^29 centimeters across, which is enormous by any scale of microphysics. It’s perfectly reasonable to ask why.
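To make the gaps between those scales concrete, here is a minimal sketch that counts the orders of magnitude separating the three lengths quoted above. The constant and function names are illustrative, and the inputs are just the round numbers from the text, not precise measurements.

```python
import math

# Round-number length scales quoted in the text, in centimeters.
PLANCK_LENGTH_CM = 1e-33
ATOM_SIZE_CM = 1e-8
UNIVERSE_SIZE_CM = 1e29


def orders_of_magnitude(small_cm, large_cm):
    """Return how many powers of ten separate two lengths."""
    return math.log10(large_cm) - math.log10(small_cm)


planck_to_atom = orders_of_magnitude(PLANCK_LENGTH_CM, ATOM_SIZE_CM)
atom_to_universe = orders_of_magnitude(ATOM_SIZE_CM, UNIVERSE_SIZE_CM)
planck_to_universe = orders_of_magnitude(PLANCK_LENGTH_CM, UNIVERSE_SIZE_CM)

print(f"Planck length to atom:     ~{planck_to_atom:.0f} orders of magnitude")
print(f"Atom to universe:          ~{atom_to_universe:.0f} orders of magnitude")
print(f"Planck length to universe: ~{planck_to_universe:.0f} orders of magnitude")
```

On these numbers the atom sits much closer to the Planck length than to the size of the universe, which is one way of seeing why the universe looks enormous by any microphysical standard.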

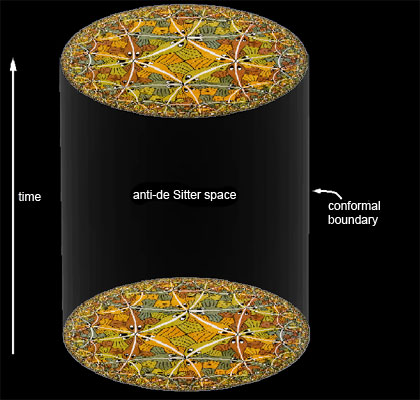

Part of the answer is that “typical” configurations of stuff, given the laws of physics as we know them, tend to be very close to empty space. (“Typical” means “high entropy” in this context.) That’s a feature of general relativity, which says that space is dynamical, and can expand and contract. So you give me any particular configuration of matter in space, and I can find a lot more configurations where the same collection of matter is spread out over a much larger volume of space. So if we were to “pick a random collection of stuff” obeying the laws of physics, it would be mostly empty space. Which our universe is, kind of.

Two big problems with that. First, even empty space has a natural length scale, which is set by the cosmological constant (energy of the vacuum). In 1998 we discovered that the cosmological constant is not quite zero, although it’s very small. The length scale that it sets (roughly, the distance over which the curvature of space due to the cosmological constant becomes appreciable) is indeed the size of the universe today: about 10^26 meters, or 10^28 centimeters. (Note that the cosmological constant itself is inversely proportional to the square of this length scale, so the question “Why is the cosmological-constant length scale so large?” is the same as “Why is the cosmological constant so small?”)
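As a rough check on that length scale, the sketch below converts a value of the cosmological constant into a length. Two things here are assumptions not stated in the post: the input value of about 1.1 × 10^-52 per square meter, which is an approximate measured figure, and the convention L = sqrt(3/Λ) (the de Sitter radius), which is one common way to define the length and is good only up to factors of order one.

```python
import math

# Assumed approximate value of the cosmological constant, in 1/m^2.
# This number is not quoted in the post; treat it as an illustrative input.
LAMBDA_PER_M2 = 1.1e-52


def cosmological_length_m(cosmological_constant):
    """Length scale set by the cosmological constant, using L = sqrt(3 / Lambda).

    The factor of 3 is a convention (the de Sitter radius); other
    definitions differ by order-one factors.
    """
    return math.sqrt(3.0 / cosmological_constant)


length_m = cosmological_length_m(LAMBDA_PER_M2)
length_cm = length_m * 100.0  # 1 m = 100 cm

print(f"Length scale: {length_m:.1e} m = {length_cm:.1e} cm")

# A smaller cosmological constant means a larger length scale:
# dividing Lambda by 4 doubles the length.
doubled = cosmological_length_m(LAMBDA_PER_M2 / 4.0)
print(f"Lambda / 4 gives a length {doubled / length_m:.1f} times larger")
```

Under these assumptions the length comes out somewhat above 10^26 meters, and the last two lines show the inverse relationship directly: shrinking the constant stretches the scale.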

This raises two big questions. The first is the “coincidence problem”: the universe is expanding, but the length scale associated with the cosmological constant is a constant, so why are they approximately equal today? The second is simply the “cosmological constant problem”: why is the cosmological constant scale so enormously larger than the Planck scale, or even than the atomic scale? It’s safe to say that right now there are no widely accepted answers to either of these questions.

So roughly: the answer to “Why is the universe so big?” is “Because the cosmological constant is so small.” And the answer to “Why is the cosmological constant so small?” is “Nobody knows.”

But there’s yet another wrinkle. Typical configurations of stuff tend to look like empty space. But our universe, while relatively empty, isn’t *that* empty. It has over a hundred billion galaxies, with a hundred billion stars each, and over 10^50 atoms per star. Worse, there are maybe 10^88 particles (mostly photons and neutrinos) within the observable universe. That’s a lot of particles! A much more natural state of the universe would be enormously emptier than that. Indeed, as space expands the density of particles dilutes away — we’re headed toward a much more natural state, which will be much emptier than the universe we see today.
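The counting in that paragraph is easy to reproduce. This is a back-of-the-envelope sketch that treats the "over" figures from the text as floor values, so the atom count is a lower bound rather than an estimate; the variable names are illustrative.

```python
# Floor values taken from the text ("over a hundred billion", "over 10^50").
GALAXIES = 10**11
STARS_PER_GALAXY = 10**11
ATOMS_PER_STAR = 10**50

# Rough total particle count quoted in the text (mostly photons and neutrinos).
TOTAL_PARTICLES = 10**88

# Lower bound on the number of atoms locked up in stars.
atoms_in_stars = GALAXIES * STARS_PER_GALAXY * ATOMS_PER_STAR

# How many particles there are for each atom counted above.
particles_per_stellar_atom = TOTAL_PARTICLES // atoms_in_stars

print(f"Atoms in stars (lower bound): 10^{len(str(atoms_in_stars)) - 1}")
print(f"Particles per stellar atom:   10^{len(str(particles_per_stellar_atom)) - 1}")
```

Even with these floor values, the atoms in stars are a tiny fraction of the total, which is why the count is dominated by photons and neutrinos.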

So, given what we know about physics, the real question is “Why are there so many particles in the observable universe?” That’s one angle on the question “Why is the entropy of the observable universe so small?” And of course the density of particles was much higher, and the entropy much lower, at early times. These questions are also ones to which we have no good answers at the moment.
