Guest Post: Grant Remmen on Entropic Gravity

“Understanding quantum gravity” is on every physicist’s short list of Big Issues we would all like to know more about. If there’s been any lesson from the last half-century of serious work on this problem, it’s that the answer is likely to be something more subtle than just “take classical general relativity and quantize it.” Quantum gravity doesn’t seem to be an ordinary quantum field theory.

In that context, it makes sense to take many different approaches and see what shakes out. Alongside old stand-bys such as string theory and loop quantum gravity, there are less head-on approaches that try to understand how quantum gravity can really be so weird, without proposing a specific and complete model of what it might be.

Grant Remmen, a graduate student here at Caltech, has been working with me recently on one such approach, dubbed entropic gravity. We just submitted a paper entitled “What Is the Entropy in Entropic Gravity?” Grant was kind enough to write up this guest blog post to explain what we’re talking about.

Meanwhile, if you’re near Pasadena, Grant and his brother Cole have written a musical, Boldly Go!, which will be performed at Caltech in a few weeks. You won’t want to miss it!

One of the most exciting developments in theoretical physics in the past few years is the growing understanding of the connections between gravity, thermodynamics, and quantum entanglement. Famously, a complete quantum mechanical theory of gravitation is difficult to construct. However, one of the aspects that we are now coming to understand about quantum gravity is that in the final theory, gravitation and even spacetime itself will be closely related to, and maybe even emergent from, the mysterious quantum mechanical property known as entanglement.

This all started several decades ago, when Hawking and others realized that black holes share many properties with garden-variety thermodynamic systems, including temperature and entropy. Most importantly, the black hole’s entropy is equal to its area [divided by (4 times Newton’s constant)]. Attempts to understand the origin of black hole entropy, along with key developments in string theory, led to the formulation of the holographic principle – see, for example, the celebrated AdS/CFT correspondence – in which quantum gravitational physics in some spacetime is found to be completely described by some special non-gravitational physics on the boundary of the spacetime. In a nutshell, one gets a gravitational universe as a “hologram” of a non-gravitational universe.
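
For reference, the entropy/area relation quoted above is usually written as the Bekenstein-Hawking formula. The version below is a sketch in symbols the post itself does not define: A is the horizon area, G is Newton’s constant, and the first form assumes natural units in which ħ, c, and Boltzmann’s constant are all set to 1.

```latex
S_{\mathrm{BH}} = \frac{A}{4G}
\quad \text{(units with } \hbar = c = k_B = 1\text{)},
\qquad
S_{\mathrm{BH}} = \frac{k_B\, c^3 A}{4 G \hbar}
\quad \text{(constants restored)}.
```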

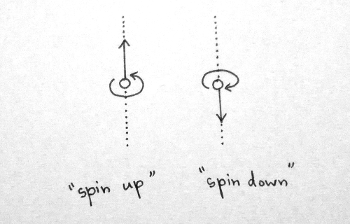

If gravity can emerge from, or be equivalent to, a set of physical laws without gravity, then something special about that non-gravitational physics has to make it happen. Physicists have now found that this special something is quantum entanglement: the special correlations among quantum mechanical particles that defy classical description. As a result, physicists are very interested in how to get the dynamics describing how spacetime is shaped and moves – Einstein’s equation of general relativity – from various properties of entanglement. In particular, it’s been suggested that the equations of gravity can be shown to come from some notion of entropy. As our universe is quantum mechanical, we should think about the entanglement entropy, a measure of the degree of correlation of quantum subsystems, which for thermal states matches the familiar thermodynamic notion of entropy.
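
In symbols, the entanglement entropy mentioned above is the von Neumann entropy of a reduced density matrix. The notation in this sketch is assumed for illustration and does not come from the post: ρ is the state of the full system, A is the subsystem of interest, Ā is its complement, and for the thermal case β is the inverse temperature, H the Hamiltonian, and Z the partition function.

```latex
% Entanglement entropy of subsystem A
\rho_A = \mathrm{Tr}_{\bar{A}}\, \rho,
\qquad
S_A = -\mathrm{Tr}\left( \rho_A \log \rho_A \right).

% For a thermal state this reduces to the thermodynamic entropy
\rho = \frac{e^{-\beta H}}{Z}
\quad \Longrightarrow \quad
S = -\mathrm{Tr}\left( \rho \log \rho \right) = \beta \langle H \rangle + \log Z .
```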

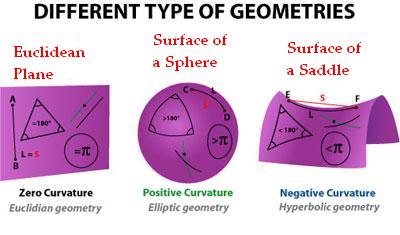

The general idea is as follows: Inspired by black hole thermodynamics, suppose that there’s some more general notion, in which you choose some region of spacetime, compute its area, and find that when its area changes this is associated with a change in entropy. (I’ve been vague here as to what is meant by a “change” in the area and what system we’re computing the area of – this will be clarified soon!) Next, you somehow relate the entropy to an energy (e.g., using thermodynamic relations). Finally, you write the change in area in terms of a change in the spacetime curvature, using differential geometry. Putting all the pieces together, you get a relation between an energy and the curvature of spacetime, which if everything goes well, gives you nothing more or less than Einstein’s equation! This program can be broadly described as entropic gravity and the idea has appeared in numerous forms. With the plethora of entropic gravity theories out there, we realized that there was a need to investigate what categories they fall into and whether their assumptions are justified – this is what we’ve done in our recent work.

In particular, there are two types of theories in which gravity is related to (entanglement) entropy, which we’ve called holographic gravity and thermodynamic gravity in our paper. The difference between the two is in what system you’re considering, how you define the area, and what you mean by a change in that area.

In holographic gravity, you consider a region and define the area as that of its boundary, then consider various alternate configurations and histories of the matter in that region to see how the area would be different. Recent work in AdS/CFT, in which Einstein’s equation at linear order is equivalent to something called the “entanglement first law”, falls into the holographic gravity category. This idea has been extended to apply outside of AdS/CFT by Jacobson (2015). Crucially, Jacobson’s idea is to apply holographic mathematical technology to arbitrary quantum field theories in the bulk of spacetime (rather than specializing to conformal field theories – special physical models – on the boundary as in AdS/CFT) and thereby derive Einstein’s equation. However, in this work, Jacobson needed to make various assumptions about the entanglement structure of quantum field theories. In our paper, we showed how to justify many of those assumptions, applying recent results derived in quantum field theory (for experts, the form of the modular Hamiltonian and vacuum-subtracted entanglement entropy on null surfaces for general quantum field theories). Thus, we are able to show that the holographic gravity approach actually seems to work!
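
The “entanglement first law” referred to above is commonly stated as follows. This is a sketch in assumed notation, not a formula taken from the post: ρ₀ is a reference state (for instance the vacuum restricted to the region), K is its modular Hamiltonian, and δ denotes a first-order change under a small perturbation of the state.

```latex
\rho_0 = \frac{e^{-K}}{\mathrm{Tr}\, e^{-K}},
\qquad
\delta S = \delta \langle K \rangle
\quad \text{(to first order in the perturbation)}.
```

The resemblance to the thermodynamic relation between changes in entropy and energy is what lets an entropy variation be traded for an energy variation in this kind of argument.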

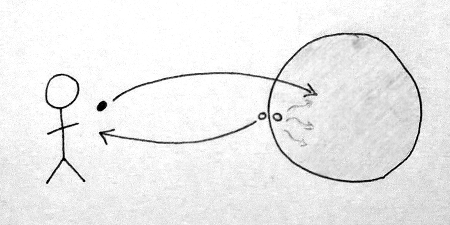

On the other hand, thermodynamic gravity is of a different character. Though it appears in various forms in the literature, we focus on the famous work of Jacobson (1995). In thermodynamic gravity, you don’t consider changing the entire spacetime configuration. Instead, you imagine a bundle of light rays – a lightsheet – in a particular dynamical spacetime background. As the light rays travel along – as you move down the lightsheet – the rays can be focused by curvature of the spacetime. Now, if the bundle of light rays started with a particular cross-sectional area, you’ll find a different area later on. In thermodynamic gravity, this is the change in area that goes into the derivation of Einstein’s equation. Next, one assumes that this change in area is equivalent to an entropy – in the usual black hole way with a factor of 1/(4 times Newton’s constant) – and that this entropy can be interpreted thermodynamically in terms of an energy flow through the lightsheet. The entropy vanishes from the derivation and the Einstein equation almost immediately appears as a thermodynamic equation of state. What we realized, however, is that it is ambiguous in thermodynamic gravity which system this entropy is actually the entropy of. Surprisingly, we found that there doesn’t seem to be a consistent definition of the entropy in thermodynamic gravity – applying quantum field theory results for the energy and entanglement entropy, we found that thermodynamic gravity could not simultaneously reproduce the correct constant in the Einstein equation and in the entropy/area relation for black holes.
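
The steps of the thermodynamic argument described above can be sketched schematically, following the usual presentation of Jacobson (1995). The symbols are assumptions introduced here for illustration: units with ħ = c = 1; k^a is the tangent to the light rays and λ an affine parameter along them that vanishes on the starting cross-section; κ is the acceleration of the local observers, with associated Unruh temperature T; η is the assumed entropy per unit area; θ is the expansion of the bundle, taken (together with the shear) to vanish at λ = 0 so that the Raychaudhuri equation gives θ ≈ −λ R_ab k^a k^b; and d𝒜 is the cross-sectional area element. Sign conventions vary between references.

```latex
\begin{aligned}
\delta Q &= T\, dS,
\qquad T = \frac{\kappa}{2\pi},
\qquad dS = \eta\, \delta A, \\[4pt]
\delta Q &= -\kappa \int \lambda\, T_{ab} k^a k^b \, d\lambda \, d\mathcal{A}, \\[4pt]
\delta A &= \int \theta \, d\lambda \, d\mathcal{A}
\approx -\int \lambda\, R_{ab} k^a k^b \, d\lambda \, d\mathcal{A}, \\[4pt]
&\Longrightarrow \quad
\frac{2\pi}{\eta}\, T_{ab} k^a k^b = R_{ab} k^a k^b
\quad \text{for all null } k^a, \\[4pt]
&\Longrightarrow \quad
R_{ab} - \tfrac{1}{2} R\, g_{ab} + \Lambda\, g_{ab}
= \frac{2\pi}{\eta}\, T_{ab}
= 8\pi G\, T_{ab}
\quad \text{if } \eta = \frac{1}{4G}.
\end{aligned}
```

The last step uses conservation of the stress tensor and the contracted Bianchi identity, with Λ appearing as an integration constant. The constant η is where the factor of 1/(4 times Newton’s constant) enters, which is why the question of what this entropy is the entropy of matters for getting both constants right at once.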

So when all is said and done, we’ve found that holographic gravity, but not thermodynamic gravity, is on the right track. To answer our own question in the title of the paper, we found – in admittedly somewhat technical language – that the vacuum-subtracted von Neumann entropy evaluated on the null boundary of small causal diamonds gives a consistent formulation of holographic gravity. The future looks exciting for finding the connections between gravity and entanglement!
