Over at the twitter dot com website, there has been a briefly-trending topic #fav7films, discussing your favorite seven films. Part of the purpose of being on twitter is to one-up the competition, so I instead listed my #fav7equations. Slightly cleaned up, the equations I chose as my seven favorites are:

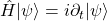

In order: Newton’s Second Law of motion, the Euler-Lagrange equation, Maxwell’s equations in terms of differential forms, Boltzmann’s definition of entropy, the metric for Minkowski spacetime (special relativity), Einstein’s equation for spacetime curvature (general relativity), and the Schrödinger equation of quantum mechanics. Feel free to Google them for more info, even if equations aren’t your thing. They represent a series of extraordinary insights in the development of physics, from the 1600’s to the present day.

Of course people chimed in with their own favorites, which is all in the spirit of the thing. But one misconception came up that is probably worth correcting: people don’t appreciate how important and all-encompassing the Schrödinger equation is.

I blame society. Or, more accurately, I blame how we teach quantum mechanics. Not that the standard treatment of the Schrödinger equation is fundamentally wrong (as other aspects of how we teach QM are), but that it’s incomplete. And sometimes people get brief introductions to things like the Dirac equation or the Klein-Gordon equation, and come away with the impression that they are somehow relativistic replacements for the Schrödinger equation, which they certainly are not. Dirac et al. may have originally wondered whether they were, but these days we certainly know better.

As I remarked in my post about emergent space, we human beings tend to do quantum mechanics by starting with some classical model, and then “quantizing” it. Nature doesn’t work that way, but we’re not as smart as Nature is. By a “classical model” we mean something that obeys the basic Newtonian paradigm: there is some kind of generalized “position” variable, and also a corresponding “momentum” variable (how fast the position variable is changing), which together obey some deterministic equations of motion that can be solved once we are given initial data. Those equations can be derived from a function called the Hamiltonian, which is basically the energy of the system as a function of positions and momenta; the results are Hamilton’s equations, which are essentially a slick version of Newton’s original $\vec{F} = m\vec{a}$.
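
As a quick check of that last claim, here is a sketch for the simplest case, assuming a single particle of mass $m$ moving in a potential $V(q)$ (the symbols $q$, $m$, and $V$ are chosen here for illustration):

$$
H(q,p) = \frac{p^2}{2m} + V(q), \qquad
\dot{q} = \frac{\partial H}{\partial p} = \frac{p}{m}, \qquad
\dot{p} = -\frac{\partial H}{\partial q} = -\frac{dV}{dq}.
$$

Substituting $p = m\dot{q}$ into the last equation gives $m\ddot{q} = -dV/dq$, which is Newton’s Second Law with the force $F = -dV/dq$.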

There are various ways of taking such a setup and “quantizing” it, but one way is to take the position variable and consider all possible (normalized, complex-valued) functions of that variable. So instead of, for example, a single position coordinate x and its momentum p, quantum mechanics deals with wave functions ψ(x). That’s the thing that you square to get the probability of observing the system to be at the position x. (We can also transform the wave function to “momentum space,” and calculate the probabilities of observing the system to be at momentum p.) Just as positions and momenta obey Hamilton’s equations, the wave function obeys the Schrödinger equation,

$$
\hat{H}|\psi\rangle = i\partial_t |\psi\rangle.
$$

Indeed, the $\hat{H}$ that appears in the Schrödinger equation is just the quantum version of the Hamiltonian.
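
To make the idea of a wave function a bit more concrete, here is a minimal numerical sketch in Python with NumPy. It assumes units with ħ = 1, and the grid size and the Gaussian wave packet (with parameters `x0`, `p0`, `sigma`) are arbitrary illustrative choices. It takes the absolute value squared of ψ(x) to get position probabilities, then uses a Fourier transform to get the momentum-space probabilities.

```python
import numpy as np

# Position grid; the range and resolution are arbitrary illustrative choices.
x = np.linspace(-20.0, 20.0, 2048, endpoint=False)
dx = x[1] - x[0]

# A Gaussian wave packet centered at x0 with mean momentum p0 (hbar = 1).
x0, p0, sigma = 0.0, 1.5, 1.0
psi = np.exp(-((x - x0) ** 2) / (4 * sigma**2) + 1j * p0 * x)
psi /= np.sqrt(np.sum(np.abs(psi) ** 2) * dx)  # normalize so probabilities sum to 1

# Probability density for finding the system at position x.
prob_x = np.abs(psi) ** 2

# Transform to momentum space with a discrete Fourier transform.
p = 2 * np.pi * np.fft.fftfreq(x.size, d=dx)   # momentum grid
dp = 2 * np.pi / (x.size * dx)                 # momentum grid spacing
psi_p = np.fft.fft(psi) * dx / np.sqrt(2 * np.pi)
prob_p = np.abs(psi_p) ** 2

total_x = np.sum(prob_x) * dx    # total probability in position space
total_p = np.sum(prob_p) * dp    # total probability in momentum space
mean_p = np.sum(p * prob_p) * dp # average momentum of the packet
```

Both totals should come out equal to one up to rounding, and the average momentum should sit at the `p0` that was put in by hand.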

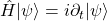

The problem is that, when we are first taught about the Schrödinger equation, it is usually in the context of a specific, very simple model: a single non-relativistic particle moving in a potential. In other words, we choose a particular kind of wave function, and a particular Hamiltonian. The corresponding version of the Schrödinger equation is

![Rendered by QuickLaTeX.com \displaystyle{\left[-\frac{1}{\mu^2}\frac{\partial^2}{\partial x^2} + V(x)\right]|\psi\rangle = i\partial_t |\psi\rangle}](https://www.preposterousuniverse.com/blog/wp-content/ql-cache/quicklatex.com-8c8f3a07d146073723554d27cf630fe0_l3.png).

If you don’t dig much deeper into the essence of quantum mechanics, you could come away with the impression that this is “the” Schrödinger equation, rather than just “the non-relativistic Schrödinger equation for a single particle.” Which would be a shame.
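
For the curious, this special case is easy to play with numerically. The sketch below is only an illustration under stated assumptions: it uses units with ħ = 1, the textbook kinetic term $-\frac{1}{2m}\partial_x^2$ for a particle of mass $m$, a finite-difference grid, and a harmonic potential picked purely as an example. The helper name `single_particle_hamiltonian` is hypothetical.

```python
import numpy as np

def single_particle_hamiltonian(x, potential, mass=1.0):
    """Finite-difference matrix for H = -(1/(2*mass)) d^2/dx^2 + V(x), with hbar = 1."""
    dx = x[1] - x[0]
    n = x.size
    # Standard three-point stencil: (psi[i+1] - 2*psi[i] + psi[i-1]) / dx^2.
    second_derivative = (
        np.diag(np.full(n - 1, 1.0), -1)
        - 2.0 * np.eye(n)
        + np.diag(np.full(n - 1, 1.0), 1)
    ) / dx**2
    # Kinetic term plus the potential evaluated on the grid (a diagonal matrix).
    return -second_derivative / (2.0 * mass) + np.diag(potential(x))

# Example choice (an assumption of this sketch): harmonic potential V(x) = x^2 / 2.
x = np.linspace(-10.0, 10.0, 400)
H = single_particle_hamiltonian(x, lambda q: 0.5 * q**2)

# The allowed energies are the eigenvalues of the Hermitian matrix H.
energies = np.linalg.eigvalsh(H)[:3]
```

With these choices the lowest eigenvalues should land close to 0.5, 1.5, and 2.5, the familiar harmonic-oscillator levels. Swapping in a different `potential` changes the Hamiltonian, not the equation.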

What happens if we go beyond the world of non-relativistic quantum mechanics? Is the poor little Schrödinger equation still up to the task? Sure! All you need is the right set of wave functions and the right Hamiltonian. Every quantum system obeys a version of the Schrödinger equation; it’s completely general. In particular, there’s no problem talking about relativistic systems or field theories — just don’t use the non-relativistic version of the equation, obviously.

What about the Klein-Gordon and Dirac equations? These were, indeed, originally developed as “relativistic versions of the non-relativistic Schrödinger equation,” but that’s not what they ended up being useful for. (The story is told that Schrödinger himself invented the Klein-Gordon equation even before his non-relativistic version, but discarded it because it didn’t do the job for describing the hydrogen atom. As my old professor Sidney Coleman put it, “Schrödinger was no dummy. He knew about relativity.”)

The Klein-Gordon and Dirac equations are actually not quantum at all — they are classical field equations, just like Maxwell’s equations are for electromagnetism and Einstein’s equation is for the metric tensor of gravity. They aren’t usually taught that way, in part because (unlike E&M and gravity) there aren’t any macroscopic classical fields in Nature that obey those equations. The KG equation governs relativistic scalar fields like the Higgs boson, while the Dirac equation governs spinor fields (spin-1/2 fermions) like the electron and neutrinos and quarks. In Nature, spinor fields are a little subtle, because they are anticommuting Grassmann variables rather than ordinary functions. But make no mistake; the Dirac equation fits perfectly comfortably into the standard Newtonian physical paradigm.
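
For reference, in natural units ($\hbar = c = 1$) and with $m$ the mass of the field (notation assumed here), the two equations take their standard textbook forms:

$$
\left(\partial_t^2 - \nabla^2 + m^2\right)\phi(t,\mathbf{x}) = 0,
\qquad
\left(i\gamma^\mu\partial_\mu - m\right)\psi(t,\mathbf{x}) = 0.
$$

Here $\phi$ is a scalar field, $\gamma^\mu$ are the Dirac matrices, and $\psi$ is the four-component spinor field itself, not the wave function $|\psi\rangle$ from earlier. Neither equation mentions a wave function at all; each one constrains a field, just as Maxwell’s equations do.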

For fields like this, the role of “position” that for a single particle was played by the variable x is now played by an entire configuration of the field throughout space. For a scalar Klein-Gordon field, for example, that might be the values of the field φ(x) at every spatial location x. But otherwise the same story goes through as before. We construct a wave function by attaching a complex number to every possible value of the position variable; to emphasize that it’s a function of functions, we sometimes call it a “wave functional” and write it as a capital letter,

![Rendered by QuickLaTeX.com \Psi[\phi(x)]](https://www.preposterousuniverse.com/blog/wp-content/ql-cache/quicklatex.com-90ee4f0f7cd2163bcb24320a52fadee9_l3.png).

The absolute-value-squared of this wave functional tells you the probability that you will observe the field to have the value φ(x) at each point x in space. The functional obeys — you guessed it — a version of the Schrödinger equation, with the Hamiltonian being that of a relativistic scalar field. There are likewise versions of the Schrödinger equation for the electromagnetic field, for Dirac fields, for the whole Core Theory, and what have you.
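
Written out for a free scalar field of mass $m$ in natural units, a sketch of that version looks like this (the explicit Hamiltonian is the standard free Klein-Gordon one, assumed here for illustration):

$$
i\partial_t \Psi[\phi, t] = \int d^3x \, \frac{1}{2}\left[-\frac{\delta^2}{\delta\phi(\mathbf{x})^2} + \left(\nabla\phi\right)^2 + m^2\phi^2\right]\Psi[\phi, t].
$$

The functional derivative $-i\,\delta/\delta\phi(\mathbf{x})$ plays the role of the momentum conjugate to the field, just as $-i\,\partial/\partial x$ does for a single particle.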

So the Schrödinger equation is not simply a relic of the early days of quantum mechanics, when we didn’t know how to deal with much more than non-relativistic particles orbiting atomic nuclei. It is the foundational equation of quantum dynamics, and applies to every quantum system there is. (There are other ways of doing quantum mechanics, of course, like the Heisenberg picture and the path-integral formulation, but they’re all basically equivalent.) You tell me what the quantum state of your system is, and what its Hamiltonian is, and I will plug into the Schrödinger equation to see how that state will evolve with time. And as far as we know, quantum mechanics is how the universe works. Which makes the Schrödinger equation arguably the most important equation in all of physics.
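
That “tell me the state and the Hamiltonian” recipe can be sketched in a few lines of Python. This is a minimal illustration, assuming a finite-dimensional system, a time-independent Hermitian Hamiltonian, and units with ħ = 1. The function name `evolve` and the two-state example are hypothetical choices.

```python
import numpy as np

def evolve(state, hamiltonian, t):
    """Solve i d|psi>/dt = H|psi> for a time-independent Hermitian H (hbar = 1)."""
    energies, vectors = np.linalg.eigh(hamiltonian)
    # Expand the state in energy eigenstates, attach the phases exp(-i E t),
    # then transform back to the original basis.
    coefficients = vectors.conj().T @ state
    return vectors @ (np.exp(-1j * energies * t) * coefficients)

# Example (an assumption of this sketch): a two-state system with H = sigma_x.
sigma_x = np.array([[0.0, 1.0], [1.0, 0.0]], dtype=complex)
initial = np.array([1.0, 0.0], dtype=complex)

final = evolve(initial, sigma_x, np.pi / 2)
probabilities = np.abs(final) ** 2  # chance of finding each basis state
```

In this example the system starts entirely in the first basis state and, after a time π/2, is found entirely in the second. Nothing in `evolve` cares whether the Hamiltonian came from a particle, a spin, or a discretized field.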

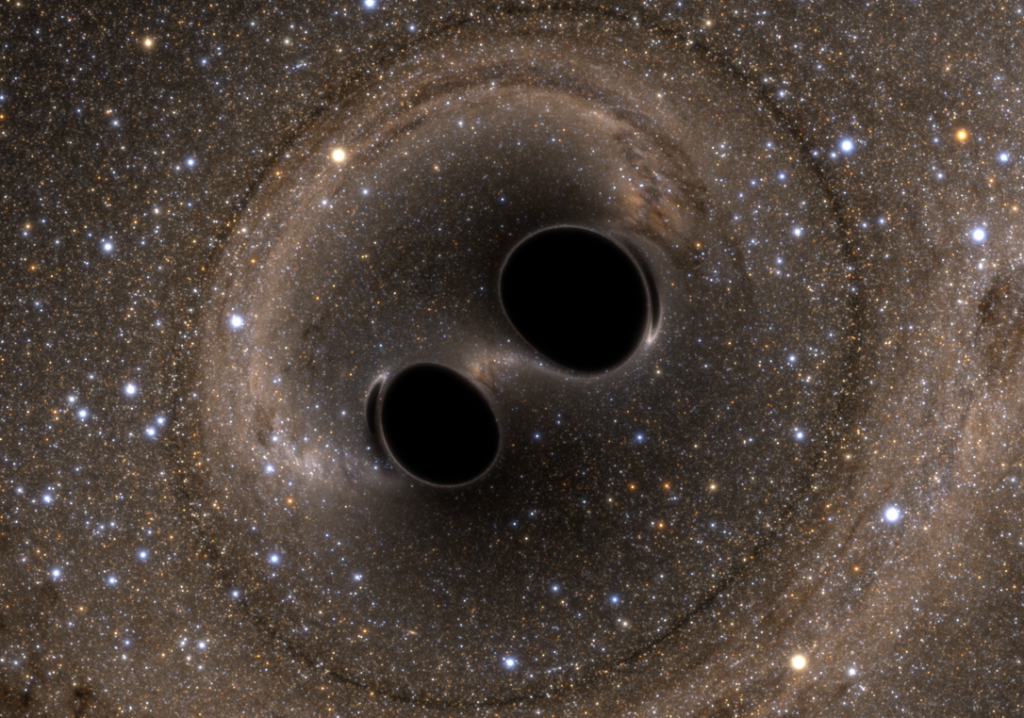

While we’re at it, people complained that the cosmological constant Λ didn’t appear in Einstein’s equation (6). Of course it does — it’s part of the energy-momentum tensor on the right-hand side. Again, Einstein didn’t necessarily think of it that way, but these days we know better. The whole thing that is great about physics is that we keep learning things; we don’t need to remain stuck with the first ideas that were handed down by the great minds of the past.