Peter Coles has issued a challenge: explain why dark energy makes the universe accelerate in terms that are understandable to non-scientists. This is a pet peeve of mine — any number of fellow cosmologists will recall me haranguing them about it over coffee at conferences — but I’m not sure I’ve ever blogged about it directly, so here goes. In three parts: the wrong way, the right way, and the math.

The Wrong Way

Ordinary matter acts to slow down the expansion of the universe. That makes intuitive sense, because the matter is exerting a gravitational force, acting to pull things together. So why does dark energy seem to push things apart?

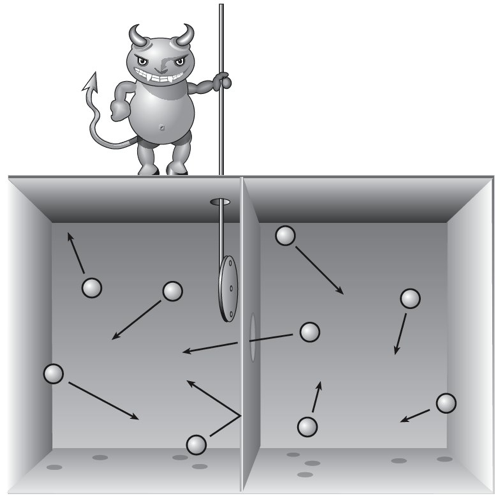

The usual (wrong) way to explain this is to point out that dark energy has “negative pressure.” The kind of pressure we are most familiar with, in a balloon or an inflated tire, pushes out on the membrane enclosing it. But negative pressure, or tension, is more like a stretched string or rubber band, pulling in rather than pushing out. And dark energy has negative pressure, so that makes the universe accelerate.

If the kindly cosmologist is both lazy and fortunate, that little bit of word salad will suffice. But it makes no sense at all, as Peter points out. Why do we go through all the conceptual effort of explaining that negative pressure corresponds to a pull, and then quickly mumble that this accounts for why galaxies are pushed apart?

So the slightly more careful cosmologist has to explain that the direct action of this negative pressure is completely impotent, because it’s equal in all directions and cancels out. (That’s a bit of a lie as well, of course; it’s really because you don’t interact directly with the dark energy, so you don’t feel pressure of any sort, but admitting that runs the risk of making it all seem even more confusing.) What matters, according to this line of fast talk, is the gravitational effect of the negative pressure. And in Einstein’s general relativity, unlike Newtonian gravity, both the pressure and the energy contribute to the force of gravity. The negative pressure associated with dark energy is so large that it overcomes the positive (attractive) impulse of the energy itself, so the net effect is a push rather than a pull.

This explanation isn’t wrong; it does track the actual equations. But it’s not the slightest bit of help in bringing people to any real understanding. It simply replaces one question (why does dark energy cause acceleration?) with two facts that need to be taken on faith (dark energy has negative pressure, and gravity is sourced by a sum of energy and pressure). The listener goes away with, at best, the impression that something profound has just happened rather than any actual understanding.

The Right Way

The right way is to not mention pressure at all, positive or negative. For cosmological dynamics, the relevant fact about dark energy isn’t its pressure, it’s that it’s persistent. It doesn’t dilute away as the universe expands. And this is even a fact that can be explained, by saying that dark energy isn’t a collection of particles growing less dense as space expands, but instead is (according to our simplest and best models) a feature of space itself. The amount of dark energy is constant throughout both space and time: about one hundred-millionth of an erg per cubic centimeter. It doesn’t dilute away, even as space expands.

Given that, all you need to accept is that Einstein’s formulation of gravity says “the curvature of spacetime is proportional to the amount of stuff within it.” (The technical version of “curvature of spacetime” is the Einstein tensor, and the technical version of “stuff” is the energy-momentum tensor.) In the case of an expanding universe, the manifestation of spacetime curvature is simply the fact that space is expanding. (There can also be spatial curvature, but that seems negligible in the real world, so why complicate things.)

So: the density of dark energy is constant, which means the curvature of spacetime is constant, which means that the universe expands at a fixed rate.

The tricky part is explaining why “expanding at a fixed rate” means “accelerating.” But this is a subtlety worth clarifying, as it helps distinguish between the expansion of the universe and the speed of a physical object like a moving car, and perhaps will help someone down the road not get confused about the universe “expanding faster than light.” (A confusion which many trained cosmologists who really should know better continue to fall into.)

The point is that the expansion rate of the universe is not a speed. It’s a timescale: the time it takes the universe to double in size (or expand by one percent, or whatever, depending on your conventions). It couldn’t possibly be a speed, because the apparent velocity of distant galaxies is not a constant number; it’s proportional to their distance. When we say “the expansion rate of the universe is a constant,” we mean it takes a fixed amount of time for the universe to double in size. So if we look at any one particular galaxy, in roughly ten billion years it will be twice as far away; in twenty billion years (twice that time) it will be four times as far away; in thirty billion years it will be eight times as far away, and so on. It’s accelerating away from us, exponentially. “Constant expansion rate” implies “accelerated motion away from us” for individual objects.
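The doubling argument can be checked with a few lines of Python. This is only an illustrative sketch: the ten-billion-year doubling time is the round figure used above, and the function names are made up for the example.

```python
import math

# Illustrative round number from the text: "roughly ten billion years".
DOUBLING_TIME_GYR = 10.0

# A fixed doubling time corresponds to a constant expansion rate H (in 1/Gyr).
H = math.log(2) / DOUBLING_TIME_GYR


def distance(d0, t_gyr):
    """Distance at time t_gyr of a galaxy that starts at d0, for constant H."""
    return d0 * math.exp(H * t_gyr)


def recession_velocity(d):
    """Apparent velocity is proportional to distance: v = H * d."""
    return H * d


if __name__ == "__main__":
    d0 = 1.0  # starting distance, arbitrary units
    for t in (0, 10, 20, 30):
        d = distance(d0, t)
        v = recession_velocity(d)
        print(f"t = {t:2d} Gyr: distance = {d:.2f}, velocity = {v:.3f}")
```

The expansion rate `H` never changes in this sketch, yet the velocity of the one galaxy being tracked grows along with its distance. That is the whole content of “constant expansion rate” meaning “accelerated motion.”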

There’s absolutely no reason why a non-scientist shouldn’t be able to follow why dark energy makes the universe accelerate, given just a bit of willingness to think about it. Dark energy is persistent, which imparts a constant impulse to the expansion of the universe, which makes galaxies accelerate away. No negative pressures, no double-talk.

The Math

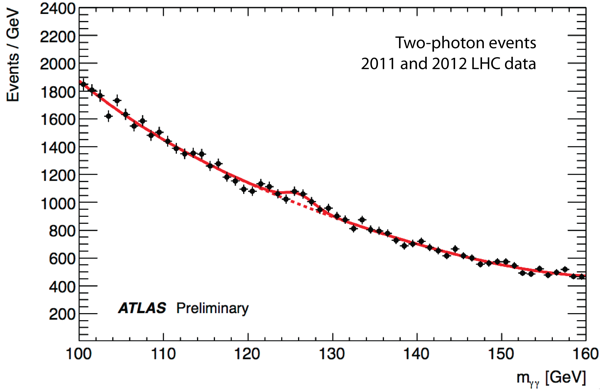

So why are people tempted to talk about negative pressure? As Peter says, there is an equation for the second derivative (roughly, the acceleration) of the universe, which looks like this:

$$
\frac{\ddot{a}}{a} = -\frac{4\pi G}{3}\,(\rho + 3p)
$$

(I use a for the scale factor rather than R, and sensibly set c=1.) Here, ρ is the energy density and p is the pressure. To get acceleration, you want the second derivative to be positive, and there’s a minus sign outside the right-hand side, so we want (ρ + 3p) to be negative. The data say the dark energy density is positive, so a negative pressure is just the trick.
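As a quick worked case of that sign argument, here are the two standard limiting examples. Both equations of state are assumptions brought in for illustration rather than anything stated above: pressureless matter has $p = 0$, and a constant vacuum energy has $p = -\rho$ (with $c = 1$). Substituting each into $\ddot{a}/a = -\tfrac{4\pi G}{3}(\rho + 3p)$ gives:

$$
\begin{aligned}
\text{matter } (p = 0): \quad & \rho + 3p = \rho & \Rightarrow \quad \frac{\ddot{a}}{a} &= -\frac{4\pi G}{3}\,\rho < 0, \\
\text{vacuum energy } (p = -\rho): \quad & \rho + 3p = -2\rho & \Rightarrow \quad \frac{\ddot{a}}{a} &= +\frac{8\pi G}{3}\,\rho > 0.
\end{aligned}
$$

So matter decelerates the expansion and vacuum energy accelerates it, exactly as the bookkeeping with $(\rho + 3p)$ suggests.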

But, while that’s a perfectly good equation (the “second Friedmann equation”), it’s not the one anyone actually uses to solve for the evolution of the universe. It’s much nicer to use the first Friedmann equation, which involves the first derivative of the scale factor rather than its second derivative (spatial curvature set to zero for convenience):

$$
H^2 \equiv \left(\frac{\dot{a}}{a}\right)^2 = \frac{8\pi G}{3}\,\rho
$$

Here H is the Hubble parameter, which is what we mean when we say “the expansion rate.” You notice a couple of nice things about this equation. First, the pressure doesn’t appear. The expansion rate is simply driven by the energy density ρ. It’s completely consistent with the second Friedmann equation, as the two are related to each other by an equation that encodes energy-momentum conservation, and the pressure does make an appearance there. Second, a constant energy density straightforwardly implies a constant expansion rate H. So no problem at all: a persistent source of energy causes the universe to accelerate.
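To put rough numbers on this, here is a back-of-the-envelope sketch in Python. It assumes a universe containing only dark energy, takes the round density quoted earlier (one hundred-millionth of an erg per cubic centimeter), and uses rounded CGS constants that are not from the post. With constant H the scale factor grows as exp(Ht), so the doubling time is ln 2 / H.

```python
import math

# Rounded physical constants in CGS units (assumed values, not from the post).
G = 6.674e-8               # gravitational constant, cm^3 g^-1 s^-2
C = 2.998e10               # speed of light, cm/s
SECONDS_PER_GYR = 3.156e16
KM_PER_MPC = 3.086e19

# The post's round figure for the dark energy density, in erg/cm^3.
RHO_ERG_PER_CM3 = 1e-8


def hubble_rate(rho_energy):
    """H in 1/s from H^2 = (8 pi G / 3) rho, restoring the factor of c^2."""
    rho_mass = rho_energy / C**2  # equivalent mass density, g/cm^3
    return math.sqrt(8 * math.pi * G * rho_mass / 3)


def doubling_time_gyr(h_per_second):
    """Time for a(t) = exp(H t) to double, in billions of years."""
    return math.log(2) / h_per_second / SECONDS_PER_GYR


if __name__ == "__main__":
    h = hubble_rate(RHO_ERG_PER_CM3)
    print(f"H ~ {h * KM_PER_MPC:.0f} km/s/Mpc")
    print(f"doubling time ~ {doubling_time_gyr(h):.1f} billion years")
```

With these inputs the doubling time comes out somewhat under ten billion years, in line with the “roughly ten billion years” used in the galaxy example. The real universe also contains matter, so this is an order-of-magnitude check rather than a measurement.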

Banning “negative pressure” from popular expositions of cosmology would be a great step forward. It’s a legitimate scientific concept, but is more often employed to give the illusion of understanding rather than any actual insight.

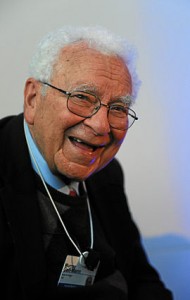

Meanwhile here at Caltech, we welcomed back favorite son Murray Gell-Mann (who now spends his days at the Santa Fe Institute) for the 50th anniversary of quarks. One of the speakers, Geoffrey West, pointed out that no Nobel was awarded for the idea of quarks. Gell-Mann did of course win the Nobel in 1969, but that was “for his contributions and discoveries concerning the classification of elementary particles and their interactions”. In other words, strangeness, SU(3) flavor symmetry, the Eightfold Way, and the prediction of the Omega-minus particle. (Other things Gell-Mann helped invent: kaon mixing, the renormalization group, the sigma model for pions, color and quantum chromodynamics, the seesaw mechanism for neutrino masses, and the decoherent histories approach to quantum mechanics. He is kind of a big deal.)


One of the chapters in Surely You’re Joking, Mr. Feynman is titled “Alfred Nobel’s Other Mistake.” The first being dynamite, of course, and the second being the Nobel Prize. When I first read it I was a little exasperated by Feynman’s kvetchy tone — sure, there must be a lot of nonsense associated with being named a Nobel Laureate, but it’s nevertheless a great honor, and more importantly the Prizes do a great service for science by highlighting truly good work.
