Quantum Sleeping Beauty and the Multiverse

Hidden in my papers with Chip Sebens on Everettian quantum mechanics is a simple solution to a fun philosophical problem with potential implications for cosmology: the quantum version of the Sleeping Beauty Problem. It’s a classic example of self-locating uncertainty: knowing everything there is to know about the universe except where you are in it. (Skeptic’s Play beat me to the punch here, but here’s my own take.)

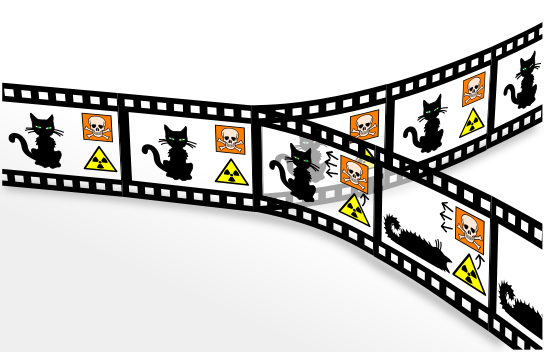

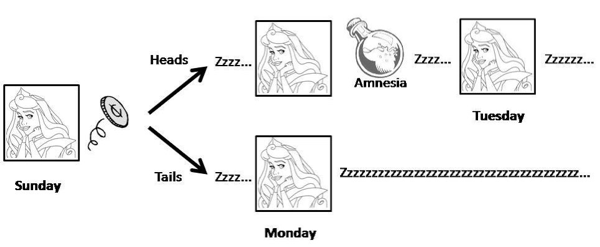

The setup for the traditional (non-quantum) problem is the following. Some experimental philosophers enlist the help of a subject, Sleeping Beauty. She will be put to sleep, and a coin will be flipped. If it comes up heads, Beauty will be awakened on Monday and interviewed; then she will (voluntarily) have all her memories of being awakened wiped out, and be put to sleep again. Then she will be awakened again on Tuesday, and interviewed once again. If the coin comes up tails, on the other hand, Beauty will only be awakened on Monday. Beauty herself is fully aware ahead of time of what the experimental protocol will be.

So in one possible world (heads) Beauty is awakened twice, in identical circumstances; in the other possible world (tails) she is only awakened once. Each time she is asked a question: “What is the probability you would assign that the coin came up tails?”

(Some other discussions switch the roles of heads and tails from my example.)

The Sleeping Beauty puzzle is still quite controversial. There are two answers one could imagine reasonably defending.

- “Halfer” — Before going to sleep, Beauty would have said that the probability of the coin coming up heads or tails would be one-half each. Beauty learns nothing upon waking up. She should assign a probability one-half to it having been tails.

- “Thirder” — If Beauty were told upon waking that the coin had come up heads, she would assign equal credence to it being Monday or Tuesday. But if she were told it was Monday, she would assign equal credence to the coin being heads or tails. The only consistent apportionment of credences is to assign 1/3 to each possibility, treating each possible waking-up event on an equal footing.
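To make the two counting rules concrete, here is a minimal simulation sketch of the protocol as described above (heads means two awakenings, tails means one). It does not settle the debate; it only shows that the fraction of experiments with tails comes out near 1/2, while the fraction of awakenings that follow tails comes out near 1/3. The function name `simulate` and its `trials` and `seed` parameters are made up for illustration.

```python
import random


def simulate(trials=100_000, seed=0):
    """Repeat the Sleeping Beauty protocol and tally tails two different ways.

    Returns (fraction of experiments with tails,
             fraction of awakenings that follow tails).
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    tails_experiments = 0
    total_awakenings = 0
    tails_awakenings = 0
    for _ in range(trials):
        tails = rng.random() < 0.5  # fair coin
        # Convention used in this post: heads -> awakened Monday and Tuesday,
        # tails -> awakened Monday only.
        n_wakes = 1 if tails else 2
        total_awakenings += n_wakes
        if tails:
            tails_experiments += 1
            tails_awakenings += n_wakes
    return tails_experiments / trials, tails_awakenings / total_awakenings


per_experiment, per_awakening = simulate()
print(f"fraction of experiments with tails: {per_experiment:.3f}")
print(f"fraction of awakenings after tails: {per_awakening:.3f}")
```

The halfer is in effect counting per experiment, and the thirder per awakening; the disagreement is over which of these frequencies Beauty's credence should track.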

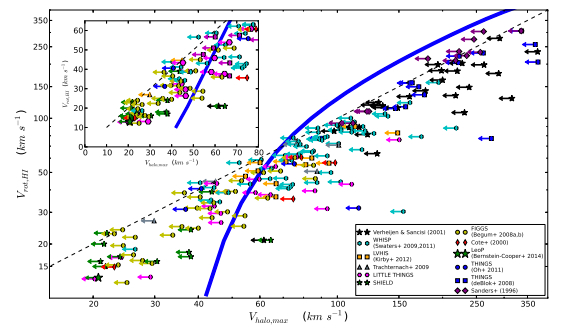

The Sleeping Beauty puzzle has generated considerable interest. It’s exactly the kind of wacky thought experiment that philosophers just eat up. But it has also attracted attention from cosmologists of late, because of the measure problem in cosmology. In a multiverse, there are many classical spacetimes (analogous to the coin toss) and many observers in each spacetime (analogous to being awakened on multiple occasions). Really the Sleeping Beauty puzzle is a test-bed for cases of “mixed” uncertainties from different sources.

Chip and I argue that if we adopt Everettian quantum mechanics (EQM) and our Epistemic Separability Principle (ESP), everything becomes crystal clear. It’s a rare case where the quantum-mechanical version of a problem is actually easier than the classical version. …
