One of a series of quick posts on the six sections of my book The Big Picture — Cosmos, Understanding, Essence, Complexity, Thinking, Caring.

Chapters in Part Five, Thinking:

- 37. Crawling Into Consciousness

- 38. The Babbling Brain

- 39. What Thinks?

- 40. The Hard Problem

- 41. Zombies and Stories

- 42. Are Photons Conscious?

- 43. What Acts on What?

- 44. Freedom to Choose

Even many people who willingly describe themselves as naturalists (who agree that there is only the natural world, obeying laws of physics) are brought up short by the nature of consciousness, or the mind-body problem. David Chalmers famously distinguished between the “Easy Problems” of consciousness, which include functional and operational questions like “How does seeing an object relate to our mental image of that object?”, and the “Hard Problem.” The Hard Problem is the nature of qualia, the subjective experiences associated with conscious events. “Seeing red” is part of the Easy Problem; “experiencing the redness of red” is part of the Hard Problem. No matter how well we might someday understand the connectivity of neurons or the laws of physics governing the particles and forces of which our brains are made, how can collections of such cells or particles ever be said to have an experience of “what it is like” to feel something?

These questions have been debated to death, and I don’t have anything especially novel to contribute to discussions of how the brain works. What I can do is suggest that (1) the emergence of concepts like “thinking” and “experiencing” and “consciousness” as useful ways of talking about macroscopic collections of matter should be no more surprising than the emergence of concepts like “temperature” and “pressure”; and (2) our understanding of those underlying laws of physics is so incredibly solid and well-established that there should be an enormous presumption against modifying them in some important way just to account for a phenomenon (consciousness) which is admittedly one of the most subtle and complex things we’ve ever encountered in the world.

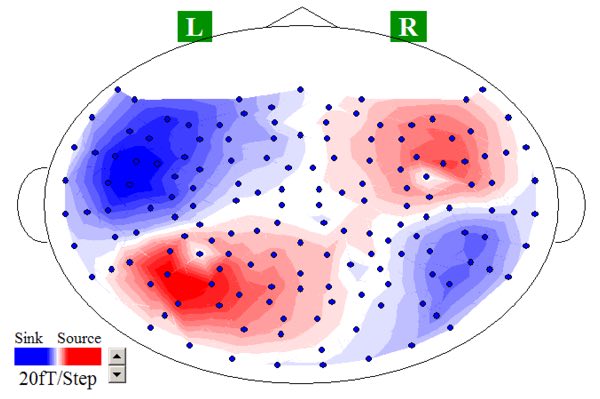

My suspicion is that the Hard Problem won’t be “solved,” it will just gradually fade away as we understand more and more about how the brain actually does work. I love this image of the magnetic fields generated in my brain as neurons squirt out charged particles, evidence of thoughts careening around my gray matter. (Taken by an MEG machine in David Poeppel’s lab at NYU.) It’s not evidence of anything surprising — not even the most devoted mind-body dualist is reluctant to admit that things happen in the brain while you are thinking — but it’s a vivid illustration of how closely our mental processes are associated with the particles and forces of elementary physics.

The divide between those who doubt that physical concepts can account for subjective experience and those who think they can is difficult to bridge precisely because of the word “subjective”: there are no external, measurable quantities we can point to that might help resolve the issue. In the book I highlight this gap by imagining a dialogue between someone who believes in the existence of distinct mental properties (M) and a poetic naturalist (P) who thinks that such properties are a way of talking about physical reality:

M: I grant you that, when I am feeling some particular sensation, it is inevitably accompanied by some particular thing happening in my brain — a “neural correlate of consciousness.” What I deny is that one of my subjective experiences simply is such an occurrence in my brain. There’s more to it than that. I also have a feeling of what it is like to have that experience.

P: What I’m suggesting is that the statement “I have a feeling…” is simply a way of talking about those signals appearing in your brain. There is one way of talking that speaks a vocabulary of neurons and synapses and so forth, and another way that speaks of people and their experiences. And there is a map between these ways: when the neurons do a certain thing, the person feels a certain way. And that’s all there is.

M: Except that it’s manifestly not all there is! Because if it were, I wouldn’t have any conscious experiences at all. Atoms don’t have experiences. You can give a functional explanation of what’s going on, which will correctly account for how I actually behave, but such an explanation will always leave out the subjective aspect.

P: Why? I’m not “leaving out” the subjective aspect, I’m suggesting that all of this talk of our inner experiences is a very useful way of bundling up the collective behavior of a complex collection of atoms. Individual atoms don’t have experiences, but macroscopic agglomerations of them might very well, without invoking any additional ingredients.

M: No they won’t. No matter how many non-feeling atoms you pile together, they will never start having experiences.

P: Yes they will.

M: No they won’t.

P: Yes they will.

I imagine that close analogues of this conversation have happened countless times, and are likely to continue for a while into the future.

I mean it would be pretty simple.

1) Define what makes a question incoherent, or what “incoherent” even means for you in general. I’d been assuming that by calling an idea incoherent you meant it leads to the principle of explosion, but given how you’ve thrown the word around in the Chinese Room example, I no longer believe that.

2) Deny that I have an icon for red or any other sensation; that is, convince me I’m a zombie devoid of sensation. I’m taking this to be the most naive definition of “qualia.”

3) Provide an explanation for my internal icons. What makes my icon for blue the one assigned to blue?

I understand how I have icons that distinguish red from blue; you can more than explain that. But you haven’t explained to me how these icons come to take on the form they do. In the same sense that I can’t experience quarks, I can’t experience your blue as you perceive it. I’m not going to deny that you have a blue sensation, though, simply because it’s inaccessible to me. At the very least I know my blue exists, which is more than I can say about quarks.

I actually don’t necessarily buy that matter is made of quarks. I think I should assume matter is made of quarks because doing so has high predictive power, higher than any other alternative, but I don’t expect any future iteration of the theory to necessarily preserve our current picture of particle physics. Perhaps in limiting cases the current model will be a useful picture of reality, but in general the composition of reality might have a completely different landscape. Quarks (or quantum fields in general) might be emergent in such a picture under the right conditions, but not the fundamental material of reality. As of now, quarks and the core theory are part of a very useful mythology, but I’m just as likely to experience quarks directly as any figure of mythology. What quarks do have is predictive power, so I will treat them as the building blocks of atoms, but I’d be crazy to believe that’s the “truth.”

Ultimately, as a scientist, though, I don’t care about the truth; that’s not what science is about. Science is about making predictions within a given model framework. Whether that model is “true” in any ontological sense is immaterial. As long as it agrees with my sensations and can handle perturbations of my sensations/perceptions of the world and still be predictive, then it has done its job. If it doesn’t, then you devise a new model. Science can’t find truth, though, and I pity anybody who believes it does. Mind you, I don’t have a faith or believe in anything supernatural either. I’m truly agnostic in that sense. I just accept that we can never really “know” and that we don’t need to. However, qualia are the one thing I know more surely than anything else, since they’re the only thing I have direct access to. Atoms, matter, energy, and so on are all convenient interpretations/organizations of my sense data.

With regard to your last question, a complete descriptive account of neuroscience would be on par with quarks even if it didn’t explain qualia. I’ve said multiple times it’s an acceptable model in terms of prediction. But there’s more than what can be tested, and admitting so is not incoherent, even if devising such a model might literally be impossible. It just means we can’t usefully predict it, which is fine, so don’t include it in your science. I do not believe my science and the ontology of my philosophy should have a one-to-one relationship, though, which is the whole point I was getting at with my first comment.

A simpler statement of my belief: “the set of all reproducibly measurable features of the universe is not in one-to-one correspondence with the set of all real features of the universe.” I argue the first set is strictly smaller. I can’t define the elements in the difference of these sets, as I can’t test them by construction, but that doesn’t mean I’m justified in saying their difference is the empty set.

Daniel

I would only if I’d also insist it would be conscious if I did all the computations myself with pen and paper. If you think the machine is conscious, then so are my hand and pen. You can argue that I’m the conscious one, not my hand or the pen or the paper; well, yeah, but then we’re back to the original Chinese Room. So, assuming you accept I gain no extra consciousness from doing this myself with pen and paper, how does a computer suddenly gain it? Use my analogy of the mouse in the room: what’s conscious in that case? The mouse certainly isn’t, not any more than usual at least; it’s still just eating cheese. The program only has form when I interpret it. If I don’t know Chinese, or rather if I have the mouse perform the same task but mix up all of the cards, all of a sudden it’s not translating Chinese. Clearly it’s translating into its own variant of Chinese, but we’d dismiss it as not knowing what it’s doing.

A program can be self-aware in the sense that it’s self-monitoring, sure, but that’s not the same as consciousness. The mouse can be tricked into performing cheese-eating tasks which manage the fidelity of the calculation; you just have to provide it with the right cards.

I’m not presupposing the conclusion, I’m extending my intuition on other forms of labor which I know can’t be conscious and I’m asking how an automated task suddenly elevates it above the other forms of labor.

Daniel

So close, and yet so far…

What does it mean for these representations to be “red,” “green,” or “blue”?

You’ve correctly identified them as being essentially arbitrary — and, indeed, malleable. It’s like ASCII or EBCDIC; they’re two different symbolic representations, but ultimately map to the same inputs and outputs.
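To make the ASCII/EBCDIC point concrete, here is a small illustrative Python sketch (added for illustration; it assumes Python’s built-in `cp037` codec as a representative EBCDIC variant). The same word gets two different byte-level representations, yet each decodes back to the same text, which is the sense in which the choice of internal encoding is arbitrary.

```python
# Encode the same word under two different symbolic conventions.
word = "red"

ascii_bytes = word.encode("ascii")   # ASCII
ebcdic_bytes = word.encode("cp037")  # EBCDIC (code page 037)

print(ascii_bytes.hex())   # 726564
print(ebcdic_bytes.hex())  # 998584

# The internal representations differ...
assert ascii_bytes != ebcdic_bytes
# ...but each one maps back to the same word.
assert ascii_bytes.decode("ascii") == ebcdic_bytes.decode("cp037") == word
```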

So, if we were to swap the internal representations of red and blue, and you eventually adapted and saw the world correctly again (analogous to the up / down swap you describe), at which point what used to be your “red” qualia is now your “blue” qualia and vice versa, but you again see red as red and blue as blue despite the swap…

…shouldn’t that tell us that the “qualia” themselves are as irrelevant and arbitrary as whether the eyes themselves are blue or brown, and that the very notion of “qualia” is itself therefore meaningless?

Again, so close…

I wouldn’t go with the Windows analogy for all sorts of reasons.

But the fact is that you’re not seeing any radical changes because all the new radical stuff reduces down to the familiar at human scales. You can calculate the trajectory of a baseball with either Quantum or Relativistic Mechanics, and you’ll get the exact same answer as Newton — despite having gone to a lot more effort in the process. Calculate the orbit of Mercury to sufficient precision or the path of an electron through a small-enough slit, and you’ll get different answers…but how often do you actually encounter such environments? And the really exciting new physics is only applicable to environments even more exotic.

That’s the point Sean keeps trying to make with his wonderful observation that the physics of the everyday world is completely understood. There’s lots more stuff to discover, but none of it is about anything that’s going to change the lives of anybody except theoretical physicists.

Cheers,

b&

Daniel:

This is perfectly analogous to the caveman telling me I’m crazy for replying to his demands for the coordinates of the Sun’s nighttime cave by describing the revolution of the Earth. He’s not dizzy from all this spinning, and the Earth clearly isn’t moving.

So: your notion of zombies is as incoherent as your notion of qualia and is as incoherent as the caveman’s overnight solar abode. Your entire model and description of cognition needs to be scrapped, even if it’s as persuasive to you as the solid immovability of the Earth is to the caveman.

Am I making any progress with you yet?

b&

No, you really should have addressed point 1 first, because I still don’t know what you mean by “incoherent.” It’s an icon you have in your mind which I can’t probe. I’m not in there with you; I have no idea what you mean by it. You need to explain it in terms of other words that (I hope) our icons agree on in order to get me on board. “Unreasonable” won’t work either; it’s going to need clear criteria of evaluation for me to get it.

Daniel:

Yes! Exactly!

It matters not the form of the computation. Any recursively self-analyzing computational model must, of necessity, be considered self-aware. How could it not?

To be fair, it’s not clear that you’d live long enough to manually perform a bit-level Turing-machine implementation of such an algorithm, and, as such, one must ponder what it is that this implementation would be aware of, as well as what use such self-introspection might be for something you’re presumably describing as being otherwise disconnected from reality.

But the system you describe would at least have the self-aware consciousness part.

b&

Daniel, never mind incoherence.

The model you are describing for consciousness has no more bearing on reality than the caveman’s model of the Solar System. Indeed, I’m having as much trouble getting through to you as you would have trying to explain the “Solar System” to the caveman.

That’s why I’m urging you to let go of all your misconceptions, and come at it as if you’ve never even pondered the problem in the first place. Don’t even assume that the Earth is the immovable foundation of all there is.

Start from scratch.

b&

I completely disagree. Imagine that instead of just me, I have a factory’s worth of people doing it with pen and paper, and I have a system for efficiently dividing up the iterations among the staff. With enough staff and paper, this might even be achievable over several human generations of computation. Now where is the consciousness? Is it in the paper? Is it divided evenly among everybody’s minds? Didn’t you reject the idea of a meta-consciousness arising from a group of people earlier in this discussion?

I’m surprised this is your position, since it obviously requires something extra-physical to hold this consciousness. I agree it’s “self-aware,” but only in the sense that arithmetic, which can encode Peano’s axioms and compute proofs about itself, is “self-aware.” Additionally, if I decide to scramble the cipher necessary to interpret what the task even is, then the machine just carries on despite there being no table to interpret its output. It doesn’t have the table; it doesn’t know how the elements of its computation correspond to an outside world. So even when it’s provided the “right” table, as decided by our semantic conventions, I don’t see how it’s suddenly self-aware. I could have given it a scrambled table in the first place. The syntactical task is the same no matter what the machine gets. It will never know what it’s computing.

If you want to argue that it’s a complexity thing, now you’re the one claiming that many already-existing inorganic systems are conscious. There are plenty of complex matrix algorithms that exceed the maximum possible complexity of the human brain’s actual neural network (if they could be run on a computer for a large enough matrix size). If you think such algorithms are conscious, that’s quite a world you live in.

What a reversal of positions. Haha.

Daniel

This is an unsubstantiated claim. I clearly have an icon for color, and you keep dancing around that fact. You can’t make that go away. You don’t have an answer for it; just accept that. That doesn’t mean it’s an ill-posed question. I mean, this analogy could just as easily be turned around. In fact, you’re closer to the caveman than I am, since the caveman’s flaw is that his ontology is smaller than his instructor’s. I’m the one with a larger ontology here. You have the current theory of vision and I have the current theory of vision + qualia. The latter can’t be tested (as of now), but that is not at all the caveman’s reasoning for dismissing the extra ontology necessary to understand how the Sun “sets.” He dismisses anything extra as “incoherent.”

In the same cave where the Sun goes at night.

I’m not.

I’ll try again, though I don’t know why.

To be aware of something is to have a model of it. To be aware of the self is to have a model of the self — of necessity a recursive function.

All this is superbly described by math, but the math is the map, not the territory. The self-awareness is in the actual execution of the algorithm. And, just as “temperature” is a useful construct even though it’s ultimately just the average kinetic energy of molecules, “self-awareness” is a useful construct even though it’s ultimately just bits (or their equivalent) flipping in whatever patterns.
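The temperature analogy can be made quantitative. For an ideal monatomic gas, kinetic theory relates temperature to the mean molecular kinetic energy by (3/2) k_B T = ⟨(1/2) m v²⟩, i.e. T = m⟨v²⟩ / (3 k_B). The Python sketch below is only an illustration under stated assumptions (an ideal monatomic gas of argon atoms, with velocities drawn from a Maxwell-Boltzmann distribution at 300 K): no single molecule carries a temperature, but averaging over many of them recovers one.

```python
import math
import random

K_B = 1.380649e-23       # Boltzmann constant in J/K (exact SI value)
ARGON_MASS = 6.6335e-26  # approximate mass of one argon atom in kg (assumption for illustration)

def sample_velocities(mass, temperature, n, seed=0):
    """Draw n 3D velocities from a Maxwell-Boltzmann distribution.

    Each Cartesian component is Gaussian with mean 0 and variance k_B * T / m.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(K_B * temperature / mass)
    return [(rng.gauss(0, sigma), rng.gauss(0, sigma), rng.gauss(0, sigma))
            for _ in range(n)]

def temperature_from_velocities(mass, velocities):
    """Ideal monatomic gas: (3/2) k_B T = <(1/2) m v^2>, so T = m <v^2> / (3 k_B)."""
    mean_v2 = sum(vx * vx + vy * vy + vz * vz for vx, vy, vz in velocities) / len(velocities)
    return mass * mean_v2 / (3 * K_B)

# Many individual molecular motions, bundled into one macroscopic number.
velocities = sample_velocities(ARGON_MASS, 300.0, 100_000)
print(temperature_from_velocities(ARGON_MASS, velocities))  # close to 300 K
```

The estimate fluctuates slightly with the random seed and sample size; it is the average that is stable, not any individual molecule’s contribution.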

Note: no qualia, no cave for the Sun, the Earth really does move, and you’re not a zombie (whatever that is) even though you lack qualia (whatever that is).

b&

And the caveman is just as certain and insistent that the Earth doesn’t move, and that, regardless, a moving Earth would be irrelevant to directing him to the cave where the Sun sleeps at night, which is all he really cares about.

You really, truly, honestly don’t have an “icon for color,” whatever that’s supposed to be, any more than the Sun has a cave it sleeps in, no matter how certain you are of that supposed fact.

b&

I don’t think that’s an adequate definition of self-awareness at all. I don’t have an accurate model of myself, so I guess I’m not self-aware. It’s quite a claim that self-awareness comes from the execution of a self-modeling algorithm. I don’t see any justification for that. You might as well call it qualia. I’m still amused that you and I have switched positions here.

Of course I have an icon for red; it’s the form red takes when I perceive it. I’m more confident that I have a mental representation for red than I am that other people have an inner mental life like I do. The latter is completely inferred and as yet completely untested.

Daniel

Daniel:

Then what is self-awareness other than an awareness of the self? And what could awareness possibly be other than a model of something?

Whoa, who wrote anything about accuracy? All models are, of necessity, imperfect.

But never mind that. Of course you have a (reasonably accurate) model of yourself. You’re thinking of a color right now, right? And that color is red, correct? How could you be aware that you’re thinking of red unless you’re aware that you’re thinking of red?

Would you consider it a similarly unreasonable claim that temperature comes from the random motions of a substance’s molecules?

When you’re done fully unpacking the second half of that sentence, you might come to understand that it doesn’t make any sense to begin with.

Where’s the temperature in a gas? What form does fifty degrees (“red”) take as compared with 500 degrees (“purple”)?

Notice how those questions scan as correct English but don’t actually make any sense, and, indeed, couldn’t possibly even begin to make sense unless one’s understanding of temperature is as confused as it might be for somebody stuck in the mindset of the caloric theory.

b&

The difference is that temperature isn’t a real thing. It’s a macroscopic quantity that conveniently bundles the collective behavior of large numbers of particles. It’s an indirect quantity, but a useful one. Consciousness could be the same for all we know, but that is counter to my experience. It is singular for me; for all I know, temperature has singular qualia as well.

I don’t have a model of myself at all, accurate or not. I have no idea how oxygen is distributed in my brain at any given time, nor do I have knowledge of most of my perception. I see only the most downstream output of vision; I am entirely unaware of anything upstream. The self-identity I do have feels like it matches, but I’m my own benchmark. Through external testing, it’s clear my perception of myself in no way models what I actually am.

Daniel

At last — we might actually be making some progress! You’re at least starting to frame the discussion in some more coherent terms.

First, again, of course your perception of yourself is fuzzy and incomplete and inaccurate. Any psychologist or other mental health professional or even meditation guru would tell you as much.

Then again, your perception of the outside world is also fuzzy and incomplete and inaccurate…you might look at a ballpoint pen and pick it up and smell it and play with it, but how complete and accurate is your actual understanding of the pen? And how well does that understanding survive after an hour, a week, a decade? At any point could you provide a precise figure for the volume of the ink in the pen, even if it’s got a see-through tube?

But…if I say to you, “A penny for your thoughts,” you’ll have no trouble telling me what’s on your mind. How is that not a demonstration of self-awareness? Sure, it might not go to the level of the voltage in such-and-such a synapse in your left toe at that moment, but, again, so what?

So, if it really is true that your perception of yourself doesn’t comport with external models…then am I to believe that you aren’t slightly dehydrated when you feel thirsty, that you don’t feel pain when you stub your toe, that standing in line at the post office doesn’t annoy you?

And…if your thoughts about all those things don’t constitute a(n imperfect) model of the real-world phenomena they describe, including thoughts about thoughts about thoughts…then what on Earth else could they possibly be?

Cheers,

b&

I think “a penny for your thoughts” indicates self-awareness, but your definition does not. I think your post hints at the right ideas but is compatible with other definitions. Let me, for example, take your definition at face value: an object (or set of objects) has self-awareness if it has a model of itself. Now we can stop right here because, to borrow your word, it’s incoherent. What does it mean to have a model? If an object has a model of itself, how can it know it has a model of itself?

Let me expand on the model thing. Normally a model in this usage is a structure that takes as input a representation of the state of some system and perhaps some extra data (a driving force, a stimulation, a perturbation, etc…) and then spits out what the state of that system would be as an effect of that extra data. A model consists of a syntax and semantics. The syntax would be the algorithm itself and the semantics would be the set of symbols or objects the syntax runs with. The semantics are interpreted and the system has no way of inferring their meaning. That’s the job of a user.

So let’s write a program. This program will generate infinite strings of integers (mod 10) that are completely random. To be sure it’s random, each number could be decided by the internal reading of its temperature mod 10 or something like that. If this machine runs for infinite time, it will produce every string possible. Among these strings will be several that are the axioms and various proofs of Peano arithmetic. However, the only way to uncover this is to have a cipher. As a user, you have to decide that certain numeric groupings and certain patterns (representing operations) are in one-to-one correspondence with the semantics of Peano arithmetic. Gödel provided one such cipher, but there are infinitely many consistent ciphers which will yield a full set of axioms and proofs of Peano arithmetic among your infinite sequence of integers.

However, your code doesn’t know the cipher. In fact, there’s no way to give it a cipher. It doesn’t know Peano arithmetic; it’s not computing it. It’s doing something else. You’re projecting onto it with your cipher. All any algorithm is, is a convenient, efficient arrangement of the appropriate logical operations that, with the right semantics, line up with the desired model. But the algorithm can’t see your cipher; it can’t interpret it, because it references objects outside of the machine’s own internal set of semantics. Your self-aware code is not executed by a self-aware machine.
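A toy version of the random-digit thought experiment above, as a hedged Python sketch: a seeded pseudorandom generator stands in for the temperature-driven digit source (an assumption), and the symbol alphabet, the two ciphers, and the helper `cipher_for` are all hypothetical, not Gödel’s actual numbering. The machine’s output is fixed; what it “says” depends entirely on which cipher a reader brings to it.

```python
import random

# Hypothetical symbol alphabet for arithmetic: zero, successor, equals,
# plus, times, parentheses, a variable, a comma, and negation.
SYMBOLS = "0S=+*()x,~"

# Two arbitrary ciphers chosen by the reader; the machine never sees them.
CIPHER_A = {d: SYMBOLS[d] for d in range(10)}
CIPHER_B = {d: SYMBOLS[9 - d] for d in range(10)}

def random_digits(n, seed):
    """Stand-in for the machine: emit n pseudorandom digits in 0..9."""
    rng = random.Random(seed)
    return [rng.randrange(10) for _ in range(n)]

def read_with(cipher, digits):
    """The interpretation step, which happens outside the machine."""
    return "".join(cipher[d] for d in digits)

def cipher_for(digits, target):
    """Try to build a cipher under which the digits begin with `target`.

    Returns None if some digit would have to stand for two different symbols.
    """
    cipher = {}
    for d, ch in zip(digits, target):
        if cipher.setdefault(d, ch) != ch:
            return None
    return cipher

digits = random_digits(20, seed=42)
print(digits)
print(read_with(CIPHER_A, digits))  # one "reading" of the output
print(read_with(CIPHER_B, digits))  # a different reading of the same digits
```

Because the two ciphers never assign the same symbol to the same digit, the two readings of any non-empty stream always differ, even though the machine did exactly the same thing in both cases.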

A more relatable example I could provide is a personal experience of mine. I worked with a community theater to put on a play which was a parody of plays in general. The play was actually an exact model of itself, and if you’re familiar with theater you’ll instantly pick up on that. It’s a repetition of the same story through the lens of different genres characterized by their tropes. However, a large number of the participants were not familiar enough with theater to understand that (me included; I’m no theater buff). They took the play at face value. Yet any audience member familiar with theater would say the performers were self-aware. But that’s not true; they demonstrably weren’t. So what was? The script? Well, only when evaluated in the context of theater history, which in this analogy is our cipher. We projected self-awareness onto the script and the performers using your definition, where clearly there is none. The script isn’t self-aware. The writers behind the script were, but in this analogy they and the audience are the only conscious individuals involved. The performers and the script are part of the machine.

So, in summary, here’s the problem with your definition: considering the various ciphers available, is something self-aware if there exists at least one cipher under which it is a model of itself? Or do all ciphers have to yield a self-model? I think a better definition would require that it not only models itself but has a cipher for interpreting itself as a model of itself. It needs semantics. No computer, with its own set of semantics, would self-interpret its task as the task we’ve given it. Likewise, my model of my own brain is incomplete and inaccurate because my internal set of semantics does not correspond to the semantics of the tasks evolution selected my brain to be capable of doing. In this analogy I’m not assuming evolution is conscious or anything like that, though by your definition I would have to conclude it is conscious, as it contains a model of itself through our own theory of it.

Daniel

Ben: I wasn’t trying to make a jump to religious mysticism. Rather neurons are living cells made from complex biochemical molecules that exhibit qualia, mind, consciousness etc. On the other hand computer gates are made from simple inorganic molecules.

Daniel, at least we’re still sorta heading in the right direction.

“This program will generate infinite strings of integers (mod 10) that are completely random.”

That would require a perpetual motion machine, for starters. And, as you note, it would generate the complete works of Shakespeare translated into monkey typing, plus an infinite number of Boltzmann brains. Sean has rather eloquently dismissed the problem with Boltzmann brains in his debates with Craig, so I don’t see any particular need to address them further.

If we instead consider a much more prosaic finite random number generator such as you might find on your smartphone, it’s obvious that there’s no consciousness. There’s no model of anything, no feedback loop, no I/O, and so on.

“Normally a model in this usage is a structure that takes as input a representation of the state of some system and perhaps some extra data (a driving force, a stimulation, a perturbation, etc…) and then spits out what the state of that system would be as an effect of that extra data.”

Great definition! Let’s run with it.

Imagine such a model designed to simulate, say, political candidates. It’s designed in such a way that it does a respectable (but, of course, not perfect) job at predicting a candidate’s answers to policy questions.

The folks at Google could probably generate such a model. And, if not today, maybe sometime in the not-too-distant future.

This program would not be self-aware.

Imagine that, independently, IBM’s Watson team develops a political-science advisor not unlike the medical advisor they’re already working on.

Neither today’s Watson nor this hypothetical PoliSci Watson would be self-aware.

But…fuse the two together, and the result would be self-aware, once you asked it to analyze its own recommendations and compare them with those of others.

Cheers,

b&

vicp:

“Ben: I wasn’t trying to make a jump to religious mysticism. Rather neurons are living cells made from complex biochemical molecules that exhibit qualia, mind, consciousness etc. On the other hand computer gates are made from simple inorganic molecules.”

Alas, there’s no way to get to your conclusion about the specialness of carbon-based circuits as opposed to silicon-based ones without invoking dualism, vitalism, spiritualism, or some other form of supernatural mysticism.

b&

Ben, you missed the point. The infinite length of the machine’s runtime isn’t what’s relevant. The point is that the computer has an infinite sample space, and any output of a finite runtime has a nonzero probability of being a string of numbers which, under the right cipher, contains the axioms and a finite selection of proofs of Peano arithmetic. Relaxing the infinite-runtime assumption does not change the situation at all. Let it be a finite machine. This has nothing to do with Boltzmann brains, and the dismissal of Boltzmann brains rests on completely different logical grounds. I agree with Sean’s take on Boltzmann brains for the most part, and yet it’s not the least bit relevant.

The machine is computing numbers which lay the foundation for its own representation, but only with the right cipher. Recall that under our semantics the machine is simply spitting out random numbers. It requires a different cipher to claim it’s even doing arithmetic, and within that set of ciphers there is a subset that interprets the program as doing arithmetic about arithmetic. So is this machine self-aware just because there is a valid interpretation of its string of numbers under which it is self-aware? You have to answer the questions I’ve raised, which you’ve dodged as expertly as if you were posting with a team of political advisors. Is it sufficient for there to exist at least one cipher under which an algorithm can be interpreted as self-aware, or does every cipher have to interpret it as a self-aware algorithm?

Let’s continue working off your definition. Any system that models itself (still incoherent, by the way, but let’s roll with it) is self-aware. Well, me plus a bagel models itself, so I guess this bagel and I form a joint consciousness. You and I each have a model of ourselves, but jointly we also have a model of ourselves complete with us interacting. I guess you and I share a joint self-awareness in addition to our own. The universe has produced beings that have modeled it, and those beings are part of the universe, so I guess the universe is self-aware. Under your definition everything is self-aware. I merely need to take the right union of sets and voilà, I get self-awareness.

You might say all of these include humans, but I don’t have to include us in these sets. The core theory is all published work out there. All humans could die, and as long as our publications were still out there, the universe would have a model of itself. It doesn’t depend on our existence to maintain its self-awareness. It apparently also doesn’t matter that there’s nothing else in the universe to interpret our publications as a model of the universe, but I guess that’s not required for self-awareness.

Daniel

Daniel, the absurdities associated with the infinite are themselves infinite. Honestly, I really don't care whether your hypothesized perpetual motion machine would or wouldn't be self-aware, any more than I care whether Harry Potter's magic wand is.

I gave you a plausible model of a self-aware computer system, one not unreasonable with today's technology. I find it most curious that you chose to completely ignore it in favor of self-aware boiled-then-baked breads.

You would presumably be aware of the sensations of eating the bagel, but your attempt to extend the awareness of the computation inside your skull to the starches being dissolved in hydrochloric acid in your gut is beyond absurd. Have I ever given you reason to think that there’s any meaningful computation going on in your lower intestine? Or is this some subtle attempt on your part to tell me I’m talking out my ass?

b&

My machine isn’t infinite. My machine is finite and can be run for finite time. My machine, in fact, actually exists, people have already built it.

I'm adopting a new policy: I'm not taking on new challenges until old ones are resolved, haha. It takes too much time to come up with examples when I have a completely appropriate one right here. Your model isn't plausible, and you dismiss the obvious counterexample I provided based on a non sequitur. I can't in good conscience continue a conversation when moving forward means accepting such a logical absurdity from my peer. Don't bother yourself with infinities if they're too incoherent (or absurd, or whatever new term you have for general dismissal) for you; please answer the relevant questions for this finite machine. I will be happy to discuss further ideas once you've answered them.

Then don't focus on the bagel example if it doesn't suit your interest. I can't tell a priori whether you think digestion is a self-aware computation, because I have no idea what your criteria for semantics are in a self-aware algorithm. There possibly exists a set of semantics under which digestion is computing a model of digestion. This is the third post of yours in which you have not clarified that part of your definition. If another example I provided suits you better, focus on my universe with no humans but all the published core theory papers. That's a less crude example anyway.

Daniel

Daniel,

You offered an excellent definition of a computational model that I liked. I even offered an example of how cutting-edge computer scientists could implement such a model today — my Google / Watson mashup example.

Now you’re going the exact opposite direction with random number generators and toast that thinks.

Demonstrate that a flipping coin or a sack of flour meets your own criteria for a model if you want me to take you seriously, or show how my Google / Watson mashup doesn't. Or don't, if you're not interested in continuing the discussion.

b&

I’m not showing it meets my criteria. I showed it meets yours.

Daniel

Daniel, from the get-go I’ve described consciousness as a self-reflective model, and I’m even now agreeing with you that your concept of a model is a good one for this discussion. I have never even hinted that a random number generator is anything remotely like a model.

But now that it should be clear to you how consciousness can arise from such a model, you're playing childish "I know you are, but what am I?" games with random number generators that even you acknowledge aren't models.

So, this is likely my last response to you, unless you wish to engage with what I’ve actually written.

Cheers,

b&