I have often talked about the Many-Worlds or Everett approach to quantum mechanics — here’s an explanatory video, an excerpt from From Eternity to Here, and slides from a talk. But I don’t think I’ve ever explained as persuasively as possible why I think it’s the right approach. So that’s what I’m going to try to do here. Although to be honest right off the bat, I’m actually going to tackle a slightly easier problem: explaining why the many-worlds approach is not completely insane, and indeed quite natural. The harder part is explaining why it actually works, which I’ll get to in another post.

Any discussion of Everettian quantum mechanics (“EQM”) comes with the baggage of pre-conceived notions. People have heard of it before, and have instinctive reactions to it, in a way that they don’t have to (for example) effective field theory. Hell, there is even an app, universe splitter, that lets you create new universes from your iPhone. (Seriously.) So we need to start by separating the silly objections to EQM from the serious worries.

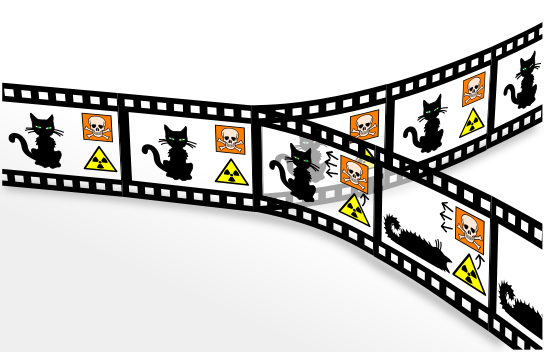

The basic silly objection is that EQM postulates too many universes. In quantum mechanics, we can’t deterministically predict the outcomes of measurements. In EQM, that is dealt with by saying that every measurement outcome “happens,” but each in a different “universe” or “world.” Say we think of Schrödinger’s Cat: a sealed box inside of which we have a cat in a quantum superposition of “awake” and “asleep.” (No reason to kill the cat unnecessarily.) Textbook quantum mechanics says that opening the box and observing the cat “collapses the wave function” into one of two possible measurement outcomes, awake or asleep. Everett, by contrast, says that the universe splits in two: in one the cat is awake, and in the other the cat is asleep. Once split, the universes go their own ways, never to interact with each other again.

And to many people, that just seems like too much. Why, this objection goes, would you ever think of inventing a huge — perhaps infinite! — number of different universes, just to describe the simple act of quantum measurement? It might be puzzling, but it’s no reason to lose all anchor to reality.

To see why objections along these lines are wrong-headed, let’s first think about classical mechanics rather than quantum mechanics. And let’s start with one universe: some collection of particles and fields and what have you, in some particular arrangement in space. Classical mechanics describes such a universe as a point in phase space — the collection of all positions and velocities of each particle or field.

What if, for some perverse reason, we wanted to describe two copies of such a universe (perhaps with some tiny difference between them, like an awake cat rather than a sleeping one)? We would have to double the size of phase space — create a mathematical structure that is large enough to describe both universes at once. In classical mechanics, then, it’s quite a bit of work to accommodate extra universes, and you better have a good reason to justify putting in that work. (Inflationary cosmology seems to do it, by implicitly assuming that phase space is already infinitely big.)

That is not what happens in quantum mechanics. The capacity for describing multiple universes is automatically there. We don’t have to add anything.

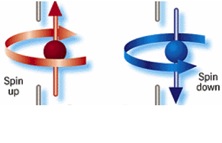

The reason why we can state this with such confidence is because of the fundamental reality of quantum mechanics: the existence of superpositions of different possible measurement outcomes. In classical mechanics, we have certain definite possible states, all of which are directly observable. It will be important for what comes later that the system we consider is microscopic, so let’s consider a spinning particle that can have spin-up or spin-down. (It is directly analogous to Schrödinger’s cat: cat=particle, awake=spin-up, asleep=spin-down.) Classically, the possible states are

“spin is up”

or

“spin is down”.

Quantum mechanics says that the state of the particle can be a superposition of both possible measurement outcomes. It’s not that we don’t know whether the spin is up or down; it’s that it’s really in a superposition of both possibilities, at least until we observe it. We can denote such a state like this:

(“spin is up” + “spin is down”).
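
To make the difference between “we don’t know” and “it’s really both” concrete, here is a minimal Python sketch. It assumes the standard representation of a spin as a pair of amplitudes, plus the normalization factor 1/√2 that the plain-text notation above leaves implicit. The names `prob` and `plus_x` are illustrative and do not come from any library. Measured along the up/down axis, the superposition and a classical 50/50 coin give identical statistics. Measured along a perpendicular (x) axis they differ: the superposition gives a definite answer, and the coin does not.

```python
import math

h = 1 / math.sqrt(2)

# A spin state is a pair of amplitudes: (amplitude for "spin is up", amplitude for "spin is down").
up = (1.0, 0.0)
down = (0.0, 1.0)
superposition = (h, h)  # ("spin is up" + "spin is down"), normalized
plus_x = (h, h)         # "spin points along +x": the same vector as the superposition

def prob(state, outcome):
    """Born-rule probability of `outcome` given `state`: |<outcome|state>|^2."""
    overlap = sum(o.conjugate() * s for o, s in zip(outcome, state))
    return abs(overlap) ** 2

# Measuring up/down: the superposition gives 50/50, just like an unseen coin.
p_up = prob(superposition, up)

# Measuring along +x: the superposition gives "+x" with certainty...
p_plus_x_superposition = prob(superposition, plus_x)

# ...while a classical 50/50 mixture of "up" and "down" gives "+x" only half the time.
p_plus_x_mixture = 0.5 * prob(up, plus_x) + 0.5 * prob(down, plus_x)

print(round(p_up, 3), round(p_plus_x_superposition, 3), round(p_plus_x_mixture, 3))  # 0.5 1.0 0.5
```

The superposition is not a coin we have not looked at yet: an ignorance mixture can never reproduce the certain “+x” result.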

While classical states are points in phase space, quantum states are “wave functions” that live in something called Hilbert space. Hilbert space is very big — as we will see, it has room for lots of stuff.

To describe measurements, we need to add an observer. It doesn’t need to be a “conscious” observer or anything else that might get Deepak Chopra excited; we just mean a macroscopic measuring apparatus. It could be a living person, but it could just as well be a video camera or even the air in a room. To avoid confusion we’ll just call it the “apparatus.”

In any formulation of quantum mechanics, the apparatus starts in a “ready” state, which is a way of saying “it hasn’t yet looked at the thing it’s going to observe” (i.e., the particle). More specifically, the apparatus is not entangled with the particle; their two states are independent of each other. So the quantum state of the particle+apparatus system starts out like this:

(“spin is up” + “spin is down” ; apparatus says “ready”) (1)

The particle is in a superposition, but the apparatus is not. According to the textbook view, when the apparatus observes the particle, the quantum state collapses onto one of two possibilities:

(“spin is up”; apparatus says “up”)

or

(“spin is down”; apparatus says “down”).

When and how such collapse actually occurs is a bit vague — a huge problem with the textbook approach — but let’s not dig into that right now.

But there is clearly another possibility. If the particle can be in a superposition of two states, then so can the apparatus. So nothing stops us from writing down a state of the form

(“spin is up”; apparatus says “up”)

+ (“spin is down”; apparatus says “down”). (2)

The plus sign here is crucial. This is not a state representing one alternative or the other, as in the textbook view; it’s a superposition of both possibilities. In this kind of state, the spin of the particle is entangled with the readout of the apparatus.
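
One quick way to check the difference between state (1) and state (2) is to write each as a table of amplitudes and ask whether it factorizes into (particle state) × (apparatus state). Each row is a spin value and each column is an apparatus readout. The sketch below assumes equal 1/√2 amplitudes. It relies on the elementary fact that a two-row amplitude table factorizes exactly when all of its 2×2 minors vanish. `is_product_state` is a hypothetical helper written for this illustration.

```python
import math
from itertools import combinations

h = 1 / math.sqrt(2)

# Amplitude tables M[spin][readout]; rows: spin up, spin down;
# columns: apparatus says "ready", "up", "down".
state_1 = [
    [h, 0.0, 0.0],  # (spin is up ; apparatus says "ready")
    [h, 0.0, 0.0],  # (spin is down ; apparatus says "ready")
]
state_2 = [
    [0.0, h, 0.0],  # (spin is up ; apparatus says "up")
    [0.0, 0.0, h],  # (spin is down ; apparatus says "down")
]

def is_product_state(m, tol=1e-12):
    """True if the two-row amplitude table factorizes as
    (particle state) x (apparatus state), i.e. has rank 1:
    every 2 x 2 minor must vanish."""
    for j, k in combinations(range(len(m[0])), 2):
        minor = m[0][j] * m[1][k] - m[0][k] * m[1][j]
        if abs(minor) > tol:
            return False
    return True

print(is_product_state(state_1))  # True: state (1) is unentangled
print(is_product_state(state_2))  # False: state (2) is entangled
```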

What would it be like to live in a world with the kind of quantum state we have written in (2)? It might seem a bit unrealistic at first glance; after all, when we observe real-world quantum systems it always feels like we see one outcome or the other. We never think that we ourselves are in a superposition of having achieved different measurement outcomes.

This is where the magic of decoherence comes in. (Everett himself actually had a clever argument that didn’t use decoherence explicitly, but we’ll take a more modern view.) I won’t go into the details here, but the basic idea isn’t too difficult. There are more things in the universe than our particle and the measuring apparatus; there is the rest of the Earth, and for that matter everything in outer space. That stuff — group it all together and call it the “environment” — has a quantum state also. We expect the apparatus to quickly become entangled with the environment, if only because photons and air molecules in the environment will keep bumping into the apparatus. As a result, even though a state of this form is in a superposition, the two different pieces (one with the particle spin-up, one with the particle spin-down) will never be able to interfere with each other. Interference (different parts of the wave function canceling each other out) demands a precise alignment of the quantum states, and once we lose information into the environment that becomes impossible. That’s decoherence.

Once our quantum superposition involves macroscopic systems with many degrees of freedom that become entangled with an even-larger environment, the different terms in that superposition proceed to evolve completely independently of each other. It is as if they have become distinct worlds — because they have. We wouldn’t think of our pre-measurement state (1) as describing two different worlds; it’s just one world, in which the particle is in a superposition. But (2) has two worlds in it. The difference is that we can imagine undoing the superposition in (1) by carefully manipulating the particle, but in (2) the difference between the two branches has diffused into the environment and is lost there forever.
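
A toy model shows how “losing information into the environment” destroys interference. It is a sketch built on stated assumptions. The environment is taken to be `n_env` identical two-state particles. In the spin-down branch, each of them is nudged by a small angle `theta`. In the spin-up branch, they are left alone. The two environment states therefore overlap by cos(theta) raised to the power `n_env`. Take the state (spin up; E_up) + (spin down; E_down), with 1/√2 on each term. The probability of finding the spin along +x then works out to ½(1 + overlap). That is 1 when the environment is untouched, and it slides toward the no-interference value ½ as more environmental particles get involved.

```python
import math

def environment_overlap(n_env, theta):
    """Toy model: <E_down|E_up> when each of n_env environment particles is
    rotated by angle theta in the spin-down branch and untouched otherwise."""
    return math.cos(theta) ** n_env

def prob_plus_x(n_env, theta):
    """Probability of finding the spin along +x for the state
    (1/sqrt 2)[(up ; E_up) + (down ; E_down)].
    The interference term is multiplied by the environment overlap."""
    return 0.5 * (1 + environment_overlap(n_env, theta))

theta = 0.1  # hypothetical, deliberately small per-particle disturbance
for n_env in (0, 10, 100, 1000):
    print(n_env, round(prob_plus_x(n_env, theta), 4))
```

Even with a tiny nudge per particle, a thousand environmental particles bring the result to within about a percent of ½. A real macroscopic apparatus in a real room involves vastly more.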

All of this exposition is building up to the following point: in order to describe a quantum state that includes two non-interacting “worlds” as in (2), we didn’t have to add anything at all to our description of the universe, unlike the classical case. All of the ingredients were already there!

Our only assumption was that the apparatus obeys the rules of quantum mechanics just as much as the particle does, which seems to be an extremely mild assumption if we think quantum mechanics is the correct theory of reality. Given that, we know that the particle can be in “spin-up” or “spin-down” states, and we also know that the apparatus can be in “ready” or “measured spin-up” or “measured spin-down” states. And if that’s true, the quantum state has the built-in ability to describe superpositions of non-interacting worlds. Not only did we not need to add anything to make it possible, we had no choice in the matter. The potential for multiple worlds is always there in the quantum state, whether you like it or not.

The next question would be, do multiple-world superpositions of the form written in (2) ever actually come into being? And the answer again is: yes, automatically, without any additional assumptions. It’s just the ordinary evolution of a quantum system according to Schrödinger’s equation. Indeed, the fact that a state that looks like (1) evolves into a state that looks like (2) under Schrödinger’s equation is what we mean when we say “this apparatus measures whether the spin is up or down.”
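
Here is a minimal sketch of what “(1) evolves into (2)” means. It assumes an idealized measurement interaction that simply permutes joint basis states (spin; readout). It sends (up; “ready”) to (up; “up”) and (down; “ready”) to (down; “down”), and it swaps the reverse pairs back so the map stays one-to-one. A permutation of an orthonormal basis is unitary, so this is a legitimate, if cartoonish, stand-in for Schrödinger evolution. `MEASURE`, `evolve` and `norm` are illustrative names, not a standard API.

```python
import math

h = 1 / math.sqrt(2)

# Hypothetical measurement interaction: a permutation of joint basis states
# (spin, apparatus readout). A permutation of an orthonormal basis is unitary.
MEASURE = {
    ("up", "ready"): ("up", "up"),
    ("up", "up"): ("up", "ready"),
    ("down", "ready"): ("down", "down"),
    ("down", "down"): ("down", "ready"),
}

def evolve(state):
    """Apply the interaction linearly, term by term, to a dict of amplitudes."""
    out = {}
    for basis, amp in state.items():
        target = MEASURE.get(basis, basis)  # unlisted basis states are left alone
        out[target] = out.get(target, 0) + amp
    return out

def norm(state):
    return math.sqrt(sum(abs(a) ** 2 for a in state.values()))

state_1 = {("up", "ready"): h, ("down", "ready"): h}  # state (1)
state_2 = evolve(state_1)                             # state (2)
print(state_2)  # {('up', 'up'): 0.7071..., ('down', 'down'): 0.7071...}
```

Nothing beyond linearity is used. The interaction is fixed by what it does to “spin is up” and “spin is down” separately. Applied to the superposition (1), it produces the two-branch state (2), in the same joint state space we started with.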

The conclusion, therefore, is that multiple worlds automatically occur in quantum mechanics. They are an inevitable part of the formalism. The only remaining question is: what are you going to do about it? There are three popular strategies on the market: anger, denial, and acceptance.

The “anger” strategy says “I hate the idea of multiple worlds with such a white-hot passion that I will change the rules of quantum mechanics in order to avoid them.” And people do this! In the four options listed here, both dynamical-collapse theories and hidden-variable theories are straightforward alterations of the conventional picture of quantum mechanics. In dynamical collapse, we change the evolution equation, by adding some explicitly stochastic probability of collapse. In hidden variables, we keep the Schrödinger equation intact, but add new variables — hidden ones, which we know must be explicitly non-local. Of course there is currently zero empirical evidence for these rather ad hoc modifications of the formalism, but hey, you never know.

The “denial” strategy says “The idea of multiple worlds is so profoundly upsetting to me that I will deny the existence of reality in order to escape having to think about it.” Advocates of this approach don’t actually put it that way, but I’m being polemical rather than conciliatory in this particular post. And I don’t think it’s an unfair characterization. This is the quantum Bayesianism approach, or more generally “psi-epistemic” approaches. The idea is to simply deny that the quantum state represents anything about reality; it is merely a way of keeping track of the probability of future measurement outcomes. Is the particle spin-up, or spin-down, or both? Neither! There is no particle, there is no spoon, nor is there the state of the particle’s spin; there is only the probability of seeing the spin in different conditions once one performs a measurement. I advocate listening to David Albert’s take at our WSF panel.

The final strategy is acceptance. That is the Everettian approach. The formalism of quantum mechanics, in this view, consists of quantum states as described above and nothing more, which evolve according to the usual Schrödinger equation and nothing more. The formalism predicts that there are many worlds, so we choose to accept that. This means that the part of reality we experience is an indescribably thin slice of the entire picture, but so be it. Our job as scientists is to formulate the best possible description of the world as it is, not to force the world to bend to our pre-conceptions.

Such brave declarations aren’t enough on their own, of course. The fierce austerity of EQM is attractive, but we still need to verify that its predictions map onto our empirical data. This raises questions that live squarely at the physics/philosophy boundary. Why does the quantum state branch into certain kinds of worlds (e.g., ones where cats are awake or ones where cats are asleep) and not others (where cats are in superpositions of both)? Why are the probabilities that we actually observe given by the Born Rule, which states that the probability of an outcome equals the squared magnitude of its amplitude in the wave function? In what sense are there probabilities at all, if the theory is completely deterministic?
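
For reference, the Born Rule itself is easy to state in code. What EQM owes is an explanation of why it holds, and the sketch below does not supply one. It assumes a hypothetical unequal superposition, √0.7 “spin is up” + √0.3·e^(iφ) “spin is down”, with an arbitrary relative phase φ. It shows that the probabilities are the squared magnitudes 0.7 and 0.3, whatever the phase.

```python
import cmath
import math

def born_probabilities(amplitudes):
    """Born Rule: each outcome's probability is the squared magnitude of its
    amplitude (divided by the total, so unnormalized states also work)."""
    weights = [abs(a) ** 2 for a in amplitudes]
    total = sum(weights)
    return [w / total for w in weights]

phi = 1.2  # hypothetical relative phase; it does not affect these probabilities
state = [math.sqrt(0.7), math.sqrt(0.3) * cmath.exp(1j * phi)]  # ["spin is up", "spin is down"]
probs = born_probabilities(state)
print([round(p, 3) for p in probs])  # [0.7, 0.3]
```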

These are the serious issues for EQM, as opposed to the silly one that “there are just too many universes!” The “why those states?” problem has essentially been solved by the notion of pointer states — quantum states split along lines that are macroscopically robust, which are ultimately delineated by the actual laws of physics (the particles/fields/interactions of the real world). The probability question is trickier, but also (I think) solvable. Decision theory is one attractive approach, and Chip Sebens and I are advocating self-locating uncertainty as a friendly alternative. That’s the subject of a paper we just wrote, which I plan to talk about in a separate post.

There are other silly objections to EQM, of course. The most popular is probably the complaint that it’s not falsifiable. That truly makes no sense. It’s trivial to falsify EQM — just do an experiment that violates the Schrödinger equation or the principle of superposition, which are the only things the theory assumes. Witness a dynamical collapse, or find a hidden variable. Of course we don’t see the other worlds directly, but — in case we haven’t yet driven home the point loudly enough — those other worlds are not added on to the theory. They come out automatically if you believe in quantum mechanics. If you have a physically distinguishable alternative, by all means suggest it — the experimenters would love to hear about it. (And true alternatives, like GRW and Bohmian mechanics, are indeed experimentally distinguishable.)

Sadly, most people who object to EQM do so for the silly reasons, not for the serious ones. But even given the real challenges of the preferred-basis issue and the probability issue, I think EQM is way ahead of any proposed alternative. It takes at face value the minimal conceptual apparatus necessary to account for the world we see, and by doing so it fits all the data we have ever collected. What more do you want from a theory than that?

Daniel Kerr says:

July 7, 2014 at 8:47 am

Bob, you can make normative statements about how science ought to be as much as you like, but if only its utility is logically/empirically justified and not its ability to produce a fundamental, ontological understanding of nature, I think we ought only operate under the assumption it is useful and not that it’s “true.” You could say I’m an empiricist like that.

__________________

I would never expect science to give any absolute ontological understanding, and all our knowledge, to be reliable, must of course be based on empirical inquiry and any logically supported consequences that result. But this doesn’t mean that it makes sense to take the position that our successful models have nothing to say about ontology. Bohr took physics down a very unproductive path with regard to the relationship between the quantum and classical worlds; it took over 30 years to recover from the incoherent viewpoint of Copenhagen.

Bob: I don’t think the point (“the point”, even if Bohr et al. made some overly strong generalizations about it) of CI was to treat all models as merely heuristic. Rather, the issue was the specific difficulty of finding a reasonable model or “picture” of things in the case of quantum phenomena. It really is difficult to reconcile what we really observe (which of course includes the specific probabilities, not just the bare fact of exclusive outcomes) with the wave function, which is postulated as an explanatory bridge between actual observations. Remember that we can’t really detect the various alleged amplitudes of a single WF in space, as if we were verifying Maxwell’s equations. We think that “must be” the way it is in order to explain results, but that already works back from the need to reconcile extension-based interference, based on ensemble detections, with the finding of “the particle” (or photon …) as detected at a given spot later. They did the best they could; trying to picture the situation just doesn’t work out well, for familiar reasons. That is the key, not an a priori attitude about philosophy of science.

You talk about recovering from their “incoherent viewpoint”, but the purported MWI alternative doesn’t even comport well with the most basic task of predicting the squared-amplitude probabilities we find. Note that the “structure” of the WF superpositions and distributions is inherently inconsistent with amplitude dependency. Various preposterous kludges are proposed such as “thicknesses” and various other widely-criticized dodges of the fundamental measure problem here. Nothing wrong with *trying*, it’s just a lousy attempt – and no more productive than CI, is it? (No particular alternative predictions.) It comes down to: the universe just doesn’t want to “play ball” you might say, however much certain people think it just “ought to” … (why should it?) – as noted in his own words by Richard Feynman.

I agree the Copenhagen interpretation has done damage to physics understanding in general. Talk to any physics undergraduate or graduate student that does not work with quantum physics and the damage is self-evident. But I don’t think the damage originated in the Copenhagen view on the ontology of QM, but rather their intuitive compromises to make a seemingly non-ontological theory connect with our intuitive notion of physical ontology.

I agree that we should treat our models as if they’re true, as if they’re ontological. It makes sense to treat the Higgs boson as a real object rather than an abstract concept organizing disjointed sensory data. But once we start talking about interpretations of quantum mechanics that imply a specific ontology and are unconstrained by empirical results, then I think it’s a more dangerous game to play. I’d rather assume the theory is completely non-ontological and choose the interpretation with the simplest ontology rather than make any normative statements on what that ontology should be (with the exception, obviously, of ones that ought to be physically consistent).

I think the ensemble interpretation is the minimum ontology interpretation with some consistent histories-type approach connecting it to classical intuition. This is rejected by some because the wavefunction itself is not considered to be ontological, but given its clear probabilistic properties, I think that’s acceptable.

Response to Neil Bates

______________

I think you’re ignoring all the progress actually made in understanding the classical-to-quantum transition, once it wasn’t career suicide to question the holy writ from Denmark. I really wish everyone interested would read Peter Byrne’s book “The Many Worlds of Hugh Everett III”. I have quoted some of it in this forum. Here’s a good quote.

______________________

From “The Many Worlds of Hugh Everett III” by Peter Byrne

Hartle and Gell-Mann credited Everett with suggesting how to apply quantum mechanics to cosmology. They considered their “Decohering sets of Histories” theory an extension of his work. Using Feynman path integrals, they painted a picture of the initial conditions of the universe when it was quantum mechanical. Their method treats the Everett “worlds” as histories, giving definite meaning to Everett branches. They assign probability weights to possible histories of the Universe and, importantly, include observers in the wave function.

Hartle declines to state whether or not he considers the branching histories outside the one we experience to be physically real, or purely computational. And he says that “predictions and tests of the theory are not affected by whether or not you take one view or the other.”

Everett, of course, settled for describing all of the branches as “equally real,” which, given that our branch is real, would mean that all branches are real.

Conference participant Jonathan J. Halliwell of MIT later wrote an article in Scientific American, “Quantum Cosmology and the Creation of Universes.” He explained that cosmologists owe Everett a debt for opening the door to a completely quantum universe. The magazine ran photographs of the most important figures in the history of quantum cosmology: Schrödinger, Gamow, Wheeler, DeWitt, Hawking, and Hugh Everett III, a student of Wheeler in the 1950s at Princeton who solved the observer-observed problem with his many worlds interpretation.

End Quote

Daniel Kerr says:

Bob, you can make normative statements about how science ought to be as much as you like, but if only its utility is logically/empirically justified and not its ability to produce a fundamental, ontological understanding of nature, I think we ought only operate under the assumption it is useful and not that it’s “true.” You could say I’m an empiricist like that.

______________

Utility is fine for engineers, but I don’t find it sufficient for scientists. Of course we mustn’t be too naive about how close our models get to objective reality, but if utility were really the only criterion, a lot of science wouldn’t be done.

Bob, that apparent “progress” about the transition really isn’t. I and others have gone over why the decoherence argument is fallacious (again, briefly: from wrongly equating optical-type “interference” with broadly causal “interference,” and the pivotal density matrix is confusingly pre-loaded with measurement-based probabilities.) And if there is no alternative prediction (other than the implied *wrong* prediction of statistics other than the actual Born statistics), what’s the point? Try reading up on the various critiques. The cosmology issue has no clear grounding; it’s all speculative as far as the connection to various interpretations goes. Finally, saying “Everett … solved the observer-observed problem with his many worlds interpretation” is unwarranted hagiography about a deservedly controversial claim.

Bob, I believe we should strive for it and a good theory should aim to achieve it, but we shouldn’t treat our interpretations as if they’ve reached such a status unless they do. If I didn’t believe this was a good goal I wouldn’t be concerned with interpretations now, would I? Haha, so obviously I agree with you in spirit.

As for many worlds, treating each world ontologically “real” doesn’t make any sense to me when we have the formalism of modal logic to categorize such worlds. However, I’m still unclear whether many world proponents argue: 1) Each universe in the multiverse has a defined state which contributes to the overall multiverse superposition, or 2) Each universe in the multiverse samples dynamical values from the distribution described by the multiverse wavefunction. The two arguments are really quite different as the former has the preferred basis problem while the latter does not. The latter is quite a different theory though as the states would have to be emergent from the dynamical values that index each universe.

Sean Carroll says: “If the particle can be in a superposition of two states, then so can the apparatus.”

Why?

If something is a superposition of two possibilities, then so can be something else? Where is the logic in that? Maybe, sometimes, indeed, depending. But not in general. Moreover, we are talking here about things of completely different natures: the particle is being measured. And it’s measured by an “apparatus”.

One is Quantum, the particle. The other is classical, the “apparatus”. In other words, Sean insists that Quantum = Classical.

Sorry, Sean. Science comes from the ability of scindere, to cut in two, to make distinctions.

An interesting aside: the philosopher Heidegger, maybe inspired by some of the less wise, contemporaneous statements of Bohr and his followers, insisted that the distinction between “subject” and “object” be eradicated. Unsurprisingly, he soon became a major figure of Nazism, where he was able to apply further lack of distinctions.

It seems to me that confusing the subject, the “apparatus”, and the object, the particle, is a particular case of the same mistake.

Neil Bates writes

Bob, that apparent “progress” about the transition really isn’t. I and others have gone over why the decoherence argument is fallacious (again, briefly: from wrongly equating optical-type “interference” with broadly causal “interference,” and the pivotal density matrix is confusingly pre-loaded with measurement-based probabilities.)

_____________

Sorry but once the claim is made that the empirically supported theory of Decoherence is fallacious, there’s really nothing left to discuss.

Bob, it’s not that decoherence doesn’t happen or that it isn’t sufficient to explain how classical probabilities result from quantum probabilities, it’s that it isn’t necessary. See this paper for example: http://link.springer.com/article/10.1007/s10701-008-9242-0#page-1

@kashyap vasavada

July 7, 2014 at 4:52 am

1) How confident are you that we have eliminated an electromagnetic source as a possible mechanism for repulsion between galaxies? We do have radio telescopes, but have we analyzed the entire electromagnetic spectrum in fine detail?

2) I have read about pulsars, however, is it possible to observe a single neutron in space? Would you be able to explain angular resolution to me?

3) Why is everyone chasing this grand unified theory? I understand that previous forces have been unified in the past, but I do not drive to my local college campus physics library using my rear view mirror.

4) Why is there more matter than antimatter? If there is an equal chance between matter and antimatter then why would one type exist instead of a universe of only radiation and no matter or antimatter?

Many worlds may be right… but anyone who subscribes to the Copenhagen interpretation needs to think about the moment of conception. No one is present, there is no observer, the egg and the sperm are so tiny, and there are millions of sperm but only one gets inside the egg… and from then on, things unfold without an observer present, as they have since the dawn of time, long before any of today’s technology. So where is the wave function collapse happening, and how?

The point being no one has any clue something has happened for quite some time…days, weeks! And whatever happened inside the womb developed through cell division one step at a time in an extremely complicated process from specific instructions ….. Things are on autopilot, except for nourishment! So, somehow I really doubt that any other quantum mechanics interpretation other than MW can allow for this and even MW could face some legitimate questions in this situation! This despite the fact that DNA itself is now known to be rooted in complex quantum effects in the double helix!

I have one question, which has already been asked: how do mass/energy conservation laws fit into this? Where does the mass come from each time a universe splits? Is anybody willing to give a short, idiot-level answer, please?

What if a subsystem of the universe makes a phase transition ? There ain’t no superposition of phases (see superselection rules, etc.)

And what if any observational act is related to a phase transition ? (There is even a brain model based on this idea).

Bob Zanelli, you wrote:

Sorry but once the claim is made that the empirically supported theory of Decoherence is fallacious, there’s really nothing left to discuss.

Yes, there is: whether you knew what the claim was in the first place. I think you are confusing whether decoherence exists and the relational effect it has on outcomes per se, with the argument of the decoherence interpretation, that purports to explain that, and why, decoherence leads to exclusive outcomes and states that can’t interact at all with each other. But the argument does not accomplish the latter task, that is what I meant (which should have been clear from both context and details.) Advice: if you think someone means to deny actual established facts, consider that you misunderstand them instead. (The exception being MWI supporters who claim there are states existing that we can’t possibly “find”.)

@Random dx/dt tangent: As a retired physics professor I would of course enjoy explaining whatever physics I know to inquisitive people. But there is only a limited amount one can explain without equations and without a chalk board. So the best recourse for you may be the nearby campus.

Answer to your question about why there is more matter than antimatter is largely unknown, although there are models based on CP violation. In fact if someone can figure this out, there is a guaranteed Nobel prize waiting for him/her.

“Answer to your question about why there is more matter than antimatter is largely unknown, although there are models based on CP violation. In fact if someone can figure this out, there is a guaranteed Nobel prize waiting for him/her.”

Our universe is a larger version of a black hole polar jet.

Matter is moving outward and away from the Universal jet emission point.

There is directionality to matter in the universe which refutes the big bang and is evidence of the universal jet.

There is a spin about a preferred axis which refutes the big bang and is evidence of the universal jet.

Dark energy is dark matter continuously emitted into the universal jet.

It’s not the big bang it’s the big ongoing.

Everett told his son to throw him out in the garbage when he dies. At least we know that is true.

But what would also be true is that there is an infinite number of Carrolls who, at this very moment, would look at the same world we live in and come to think the multiverse is absurd, making this Carroll’s opinion pointless. If that’s the price you need to pay to support your worldview, I say get out the Hefty bags.

The MWI makes sense in many ways, especially given the notion of a pointer basis. But the notion of probability does not seem entirely well-defined either. Basically, you need some kind of measure on the space of universes, and that seems to be quantum++ (if you will). I am willing to buy quantum++, but it would seem to require an extra assumption with regard to measurements. Once that happens, it is not clear why MWI is better than, say, assuming that the universe is fundamentally probabilistic and that these probabilities are naturally complex. Then wave function collapse is just the process by which a “35% chance of a spin-up photon” becomes “that photon was either spin up or spin down.”

To people who actually know about this: is there a natural way to interpret probabilities without additional assumptions in MWI?

Cmt, others can speak for themselves, but here is my take on your question: the proper Born statistics surely do not come naturally out of MWI. It’s not a neutral “mystery” for it. The structure of continuing superpositions is inherently inimical to frequentist Born probabilities. The “structure” is the same regardless of the amplitudes, but the amplitudes squared are the actual basis for probabilities.

Well, you can claim that wrong stats aren’t the “necessary” outcomes of that “interpretation” (and, with those implications, it really counts as a “theory” after all ….), but they are the straightforward outcome it suggests before any twiddling with contrivances. Some of those contrivances are clunky and baffling, like pretending that the more frequent stream is “thicker” or “more real,” whatever that means. If MWI needs all that trouble and still can’t make a decent case for the most fundamental trait of quantum statistics, then it should be considered suspect until a clear and convincing case can be made that it really does predict Born probabilities.
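To make the counting worry concrete, here is a toy sketch (my own illustration, not anything from the post). It assumes each measurement splits every branch into exactly two, with Born weight p for outcome 0 and 1 − p for outcome 1, and it takes “naive branch counting” to mean giving every branch equal weight. The helper `branch_stats` is hypothetical: for branches whose count of outcome 0 lies within a window around a target frequency, it reports both the fraction of all branches and their total Born weight.

```python
from math import comb


def branch_stats(n: int, p: float, target: float, half_width: int):
    """Toy model of n repeated two-outcome measurements (an assumption, not EQM itself).

    Each measurement splits every branch in two, so there are 2**n branches.
    A branch with k occurrences of outcome 0 has Born weight p**k * (1 - p)**(n - k).
    Returns (fraction of branches, total Born weight) over branches whose count k
    lies within half_width of round(target * n).
    """
    center = round(target * n)
    branches = 0       # branches in the window, counted equally (naive counting)
    born_weight = 0.0  # summed squared amplitudes of those same branches
    for k in range(n + 1):
        if abs(k - center) <= half_width:
            branches += comb(n, k)
            born_weight += comb(n, k) * p**k * (1 - p) ** (n - k)
    return branches / 2**n, born_weight


if __name__ == "__main__":
    n, p = 100, 0.9
    # Branches whose observed frequency of outcome 0 is near the Born value p.
    print("near p:  ", branch_stats(n, p, target=p, half_width=5))
    # Branches whose observed frequency is near 1/2, what equal counting favors.
    print("near 1/2:", branch_stats(n, p, target=0.5, half_width=5))
```

With n = 100 and p = 0.9, most branches show frequencies near 1/2, while most of the Born weight sits on a vanishingly small fraction of branches showing frequencies near 0.9. The branch counts do not depend on p at all; only the weights do, which is the sense in which the “structure” is the same regardless of the amplitudes.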

BTW I think I had a cookie glitch, which accounts for the alternative avacon (that may be a silly Neilogism, for avatar-icon, but I thought I’d try it out. Note, it is also the name of a company, a very interesting one.)

Dr. Carroll,

I’ve read and much enjoyed your “From Eternity to Here” and I found myself agreeing (in a presumptuous layperson’s way) with your conclusions about Many-Worlds. But after reading this post, and today observing a tree in a fairly strong wind with its many leaves twisting this way and that, I wonder whether a new world must be created for every possible alternate twist of the many leaves. If so, then the number of worlds must be infinite, and so must the number of particles, since every “occurrence” could be considered “observed.”

Here’s another complication: “the wave function” whose evolution we are able to project is itself based on a previous measurement/collapse/preparation event. So then, what is the entire “original” WF? If we consider a new sub-WF for each apparent “observation,” do we then have “sub-sets” of associated WFs, and doesn’t that spoil both the idea of the superfluity of “measurements” and the simplicity of a big set of superpositions? (And you can decompose a wave into many different choices of components anyway; compare vectors.) I’m sure someone has offered answers; what are they, and how good are they?

Neil, I think all this tells you is that you cannot necessarily infer exactly the quantum state that was “prepared” in a past big bang event. That makes sense: if you only have the projected/filtered data, you cannot infer the larger data set from which it originates.

I’m not sure how important it is that you need to know that wavefunction unless you’re interested in replicating the universe’s conception down to the quantum precision of its “setup,” if that’s even a sensible concept.

Dan, being able to know that wave function per se is not my essential point. (And as you know, we can’t easily “know a WF” anyway, given the practical measurement problems, aside from “what’s really going on.”) What I mean is: the situations that we consider to generate “new” WFs are themselves the result (or can be) of previous quantum experiments. So, consider “the” current WF, about which we conjecture: “will the different components of the superposition continue to evolve separately despite a measurement ‘seeming’ to select out one of them?” (Never mind that the argument for why a lack of coherence would actually keep them causally separated wrongly conflates having an evidentiary pattern of amplitudes, versus not, with “interaction” in the general sense ….) Well, that WF should itself already have been generated as one of the possible *wave functions* that could be produced under different circumstances. So then, again: do we have a sort of sub-grouping of superposition components? It would be a sort of infinite “nesting” of superpositions of superpositions, wouldn’t it? How does that work? Indeed, is this intelligible and integrable into quantum theory?

(PS, hint: I’m not looking to innocently “find out” things for the sake of knowledge, I am trying to catch the MWI in problems and to imply it’s not such an elegant idea, after all.)