Thanksgiving

This year we give thanks for an idea that establishes a direct connection between the concepts of “energy” and “information”: Landauer’s Principle. (We’ve previously given thanks for the Standard Model Lagrangian, Hubble’s Law, the Spin-Statistics Theorem, conservation of momentum, effective field theory, the error bar, and gauge symmetry.)

Landauer’s Principle states that irreversible loss of information, whether it’s erasing a notebook or wiping a computer disk, is necessarily accompanied by an increase in entropy. Charles Bennett puts it in relatively precise terms:

Any logically irreversible manipulation of information, such as the erasure of a bit or the merging of two computation paths, must be accompanied by a corresponding entropy increase in non-information bearing degrees of freedom of the information processing apparatus or its environment.
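Bennett’s phrase “merging of two computation paths” has a concrete meaning. A logic operation is irreversible when two different inputs lead to the same output, so you can no longer tell which input you started with. The Python sketch below measures how many bits each of a few gates destroys, computed as H(input) - H(output). It assumes the inputs are uniformly random. The gate table and function names are illustrative and do not come from any library. Landauer’s bound then assigns a minimum of kT ln 2 of heat to each bit destroyed.

```python
from collections import Counter
from itertools import product
from math import log2


def entropy_bits(outcomes):
    """Shannon entropy, in bits, of a list of equally likely outcomes."""
    counts = Counter(outcomes)
    n = len(outcomes)
    return -sum((c / n) * log2(c / n) for c in counts.values())


def bits_lost(gate, n_inputs):
    """Information destroyed by `gate` on uniformly random inputs:
    H(input) - H(output), which is zero exactly when the gate is one-to-one."""
    inputs = list(product((0, 1), repeat=n_inputs))
    outputs = [gate(*x) for x in inputs]
    return entropy_bits(inputs) - entropy_bits(outputs)


# Illustrative gate table: name -> (function returning an output tuple, number of inputs)
GATES = {
    "NOT": (lambda a: (1 - a,), 1),
    "ERASE": (lambda a: (0,), 1),  # reset the bit to a standard value
    "AND": (lambda a, b: (a & b,), 2),  # two input paths merge into one output
    "CNOT": (lambda a, b: (a, a ^ b), 2),  # reversible: the inputs can be recovered
}

for name, (gate, n) in GATES.items():
    print(f"{name:5s} loses {bits_lost(gate, n):.3f} bits")
```

NOT and CNOT are one-to-one and lose nothing. ERASE loses exactly one bit, and AND loses about 1.19 bits. Under Landauer’s Principle, only the lossy gates carry an unavoidable heat cost.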

The principle captures the broad idea that “information is physical.” More specifically, it establishes a relationship between logically irreversible processes and the generation of heat. If you want to erase a single bit of information in a system at temperature T, says Landauer, you will generate an amount of heat equal to at least

$$E = kT \ln 2,$$

where k is Boltzmann’s constant.
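To get a feel for the size of this bound, here is a minimal Python sketch that evaluates kT ln 2 at an assumed room temperature of 300 K. It uses the exact SI values of Boltzmann’s constant and the electronvolt. `landauer_bound` is an illustrative helper name, and the 8 × 10⁹-bit “gigabyte” is there only to give a familiar scale.

```python
from math import log

K_B = 1.380649e-23  # Boltzmann's constant in J/K (exact in the 2019 SI)
EV = 1.602176634e-19  # one electronvolt in joules (exact in the 2019 SI)


def landauer_bound(temperature_k, bits=1.0):
    """Minimum heat, in joules, released when erasing `bits` bits at temperature T."""
    return bits * K_B * temperature_k * log(2)


q = landauer_bound(300.0)  # assumed room temperature
print(f"one bit at 300 K: {q:.3e} J = {q / EV:.4f} eV")
print(f"8e9 bits (1 GB) at 300 K: {landauer_bound(300.0, 8e9):.2e} J")
```

At 300 K one bit costs at least about 2.9 × 10⁻²¹ J, or roughly 0.018 eV. Even erasing a full gigabyte at the Landauer limit would release only around 2.3 × 10⁻¹¹ J.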

This all might come across as a blur of buzzwords, so take a moment to appreciate what is going on. “Information” seems like a fairly abstract concept, even in a field like physics where you can’t swing a cat without hitting an abstract concept or two. We record data, take pictures, and write things down all the time, and we forget, or erase, or lose our notebooks all the time, too. Landauer’s Principle says there is a direct connection between these processes and the thermodynamic arrow of time, the increase in entropy throughout the universe. The information we possess is a precious, physical thing, and we are gradually losing it to the heat death of the cosmos under the irresistible pull of the Second Law.

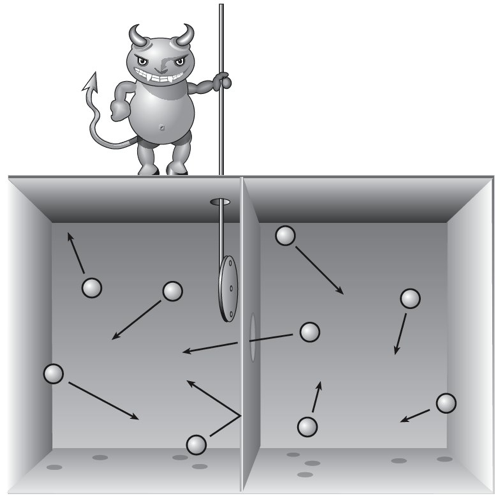

The principle originated in attempts to understand Maxwell’s Demon. You’ll remember the plucky sprite who decreases the entropy of a gas in a box by letting all the high-velocity molecules accumulate on one side and all the low-velocity ones on the other. Ever since Maxwell proposed the Demon, all right-thinking folks have agreed that the entropy of the whole universe must somehow be increasing along the way, but it turned out to be really hard to pinpoint just where that increase was happening.

The answer is not, as many people supposed, in the act of the Demon observing the motion of the molecules; it’s possible to make such observations in a perfectly reversible (entropy-neutral) fashion. But the Demon has to somehow keep track of what its measurements have revealed. And unless it has an infinitely big notebook, it’s eventually going to have to erase some of its records about the outcomes of those measurements, and that erasure is the truly irreversible process. This was the insight of Rolf Landauer in the 1960s, which led to his principle.
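The distinction between reversible measurement and irreversible erasure can be made concrete with a one-bit toy model. In this hypothetical sketch, the Demon’s notebook is a single memory bit. The molecule’s position is also a single bit, recording whether it is in the left or right half of the box. `measure`, `erase`, and `is_reversible` are illustrative names. Measurement is modeled as a controlled-NOT that XORs the molecule’s side into the memory, which never maps two states onto one. Erasure resets the memory to 0, which does map two states onto one.

```python
from itertools import product

# Joint state: (side, memory). side = which half of the box holds the molecule
# (0 = left, 1 = right); memory = one bit of the Demon's notebook.
STATES = list(product((0, 1), repeat=2))


def measure(state):
    """Reversible measurement: XOR the molecule's side into the memory bit (a CNOT)."""
    side, memory = state
    return (side, memory ^ side)


def erase(state):
    """Reset the memory bit to the blank value 0, whatever it held before."""
    side, _ = state
    return (side, 0)


def is_reversible(step):
    """A deterministic step can be undone iff distinct states stay distinct."""
    return len({step(s) for s in STATES}) == len(STATES)


print("measure reversible:", is_reversible(measure))  # True
print("erase reversible:  ", is_reversible(erase))  # False

# Starting from a blank memory, the measurement leaves an accurate record.
for side in (0, 1):
    assert measure((side, 0)) == (side, side)
```

Because `measure` is its own inverse, applying it twice restores the original state, which is why it can be entropy-neutral in principle. `erase` sends two distinct states to the same one, so the information about the old memory contents has to go somewhere else: into the environment, as heat.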

A 1982 paper by Bennett provides a nice illustration of the principle in action, based on Szilard’s Engine. Short version of the argument: imagine you have a box with a single molecule in it, rattling back and forth. If you don’t know where it is, you can’t extract any energy from it. But if you measure the position of the molecule, you could quickly slide a piston into the middle of the box. You would then let the molecule bump into the piston and push it toward the empty side, extracting energy as it goes. The amount you get out is (ln 2)kT. You have “extracted work” from a system that was supposed to be at maximum entropy, in apparent violation of the Second Law.

But it was important that your memory started in a “ready state,” before it recorded where the molecule was. In a world governed by reversible laws, that’s a crucial step if you want your measurement to correspond reliably to the correct result. So to do this kind of thing repeatedly, you will have to return your memory to that ready state, which means erasing information. Erasure shrinks the phase space available to the memory. Because the underlying laws are reversible, that lost phase space must reappear elsewhere as an increase in the entropy of the environment, in the form of heat. At the end of the day, that information erasure generates at least as much entropy as went down when you extracted work; the Second Law is perfectly safe.
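Bennett’s bookkeeping can be checked with a few lines of arithmetic. This Python sketch works under the standard idealization of Szilard’s engine. It assumes the one-molecule “gas” obeys p = kT/V and that the piston expands it slowly and isothermally from V/2 to V. The helper name `isothermal_work` and the 300 K bath temperature are illustrative choices, not taken from Bennett’s paper. The sketch integrates p dV numerically, compares the result with kT ln 2, and tallies the entropy changes over one cycle in units of k.

```python
from math import log

K_B = 1.380649e-23  # Boltzmann's constant, J/K


def isothermal_work(temperature_k, v_start, v_end, steps=100_000):
    """Work done by a single-molecule ideal gas (assumed p = kT/V) as it expands
    isothermally from v_start to v_end, integrated with the midpoint rule."""
    dv = (v_end - v_start) / steps
    return sum(K_B * temperature_k / (v_start + (i + 0.5) * dv) * dv
               for i in range(steps))


T = 300.0  # assumed bath temperature in kelvin
V = 1.0  # box volume in arbitrary units; only the ratio V / (V/2) matters

work = isothermal_work(T, V / 2, V)
print(f"work / (kT ln 2) = {work / (K_B * T * log(2)):.6f}")

# Entropy bookkeeping per cycle, in units of Boltzmann's constant k:
ds_bath = -work / (K_B * T)  # heat drawn from the bath and turned into work
ds_erase = log(2)  # Landauer minimum for resetting the one-bit memory
print(f"bath: {ds_bath:+.6f} k, erasure: >= {ds_erase:+.6f} k")
```

The work ratio comes out at 1 to within the integration error. In this ideal limit the two entropy terms cancel exactly, and any real, non-ideal cycle only adds entropy, so the net change is never negative.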

The status of Landauer’s Principle is still a bit controversial in some circles (here’s a paper by John Norton setting out the skeptical case). But modern computers are steadily approaching the physical limits on irreversible computation established by the Principle, and experiments seem to be verifying it. Even something as abstract as “information” is ultimately part of the world of physics.